Last Update: February 5, 2025

BY eric

Stable Diffusion Parameter Guide

Stable Diffusion is a powerful AI image generation model that transforms text prompts into high-quality visuals. To get the best results, it's essential to understand the various parameters that influence image generation. This guide explains key parameters, their impact, and how to fine-tune them for optimal results.

Classifier Free Guidance (CFG)

CFG controls the balance between creativity and prompt adherence. The default value is 7. Lower values give the model more freedom, while higher values make it follow the prompt more strictly. Changing the value does not affect VRAM usage or generation time.

CFG 2-6: High creativity, but less aligned with the prompt.

CFG 7-10: Recommended for most cases, balancing creativity and control.

CFG 11-15: Useful when the prompt is highly detailed and specific.

CFG 16-20: Not recommended unless the prompt is exceptionally detailed, as it may reduce coherence and image quality.
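Under the hood, the CFG value is a multiplier in a single combination step. At each denoising step the model predicts noise twice, once with the prompt and once without it (the unconditional prediction), and the two are blended by extrapolating from the unconditional prediction toward the prompt-conditioned one. The sketch below shows that arithmetic on plain Python lists standing in for noise tensors; the name `apply_cfg` and the toy values are ours, not from any library.

```python
from typing import List


def apply_cfg(noise_uncond: List[float], noise_cond: List[float], cfg_scale: float) -> List[float]:
    """Classifier-free guidance: extrapolate from the unconditional
    prediction toward the prompt-conditioned prediction."""
    if len(noise_uncond) != len(noise_cond):
        raise ValueError("predictions must have the same shape")
    return [u + cfg_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


# Toy 3-element "noise predictions" (hypothetical values).
uncond = [0.10, -0.20, 0.05]
cond = [0.30, -0.10, 0.05]

for scale in (1.0, 7.0, 15.0):
    # A scale of 1 returns the conditional prediction unchanged;
    # larger scales push further in the prompt's direction.
    print(scale, [round(v, 3) for v in apply_cfg(uncond, cond, scale)])
```

Because both predictions are computed whatever the scale is, moving CFG from 7 to 12 changes the result but not the amount of work, which is consistent with the point above about VRAM and generation time.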

Steps (Sampling Steps / Precision)

Sampling Steps sets the number of denoising iterations. This guide uses a default of 50, though defaults vary by interface (Automatic1111, for example, defaults to 20). Stable Diffusion begins with a noise-filled canvas and gradually removes noise to produce the final image, one step per iteration. Generally, a higher step count improves image quality, up to a point. Steps do not affect VRAM usage, but generation time grows roughly in proportion to the step count.

For beginners, using the default value of 50 is recommended.

Higher steps = better image quality but longer generation time.

Lower steps = faster generation but may result in less detailed images.

Higher step counts (75-100) may improve fine details but with diminishing returns beyond a certain point.
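The step count decides how many points along the noise schedule the sampler visits. Stable Diffusion was trained with 1,000 noise levels (timesteps), and a sampler running N steps visits only N of them. The sketch below picks evenly spaced timesteps, similar to the "leading" spacing used by DDIM-style schedulers in diffusers; it is simplified and omits details such as the offset some scheduler configurations add. The helper names are hypothetical.

```python
from typing import List

TRAIN_TIMESTEPS = 1000  # noise levels Stable Diffusion was trained on


def leading_timesteps(num_steps: int, train_timesteps: int = TRAIN_TIMESTEPS) -> List[int]:
    """Evenly spaced timesteps, visited from noisiest to cleanest.
    Simplified: real schedulers may add an offset or use other spacings."""
    if not 1 <= num_steps <= train_timesteps:
        raise ValueError("num_steps must be between 1 and train_timesteps")
    ratio = train_timesteps // num_steps
    return [i * ratio for i in range(num_steps)][::-1]


def noise_predictions(num_steps: int, use_cfg: bool = True) -> int:
    """One noise prediction per step, or two when CFG also needs the
    unconditional prediction (often batched together, but still twice the work)."""
    return num_steps * (2 if use_cfg else 1)


for steps in (20, 50, 100):
    ts = leading_timesteps(steps)
    print(f"{steps} steps: first={ts[0]}, last={ts[-1]}, predictions={noise_predictions(steps)}")
```

Since every visited timestep costs a fixed amount of model work, doubling the steps roughly doubles generation time while memory use stays the same.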

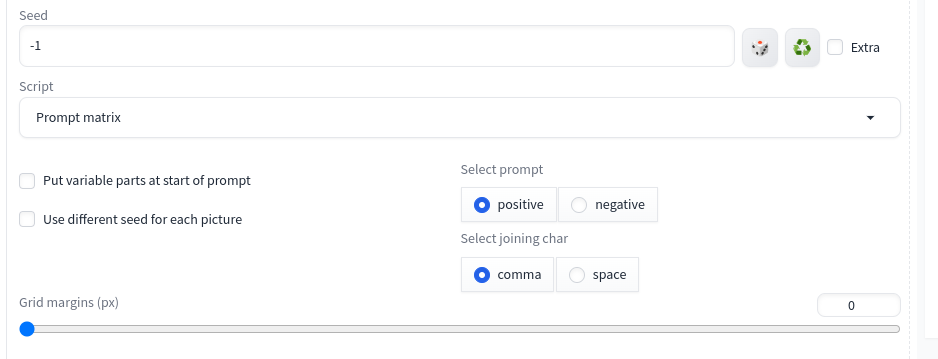

Seed (Random Seed Control)

The Seed parameter determines the initial noise used for image generation. The default value is -1, meaning a random seed is used for each generation.

Using the same seed with identical prompts and settings reproduces the same image, although results can differ slightly across GPUs or software versions.

Changing the seed results in a different image, even with the same prompt.

If a particular seed produces high-quality results, it can be saved and reused.
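The seed logic can be illustrated with Python's standard `random` module. Real pipelines seed a framework generator instead (in PyTorch, for example, `torch.Generator().manual_seed(seed)`) and draw a latent noise tensor, but the principle is the same: the seed fully determines the starting noise. In this sketch the `-1` convention follows the text, the 32-bit seed range is an assumption, and the "noise" is a short list rather than a real latent.

```python
import random
from typing import List

MAX_SEED = 2**32 - 1  # assumed seed range (32-bit unsigned)


def resolve_seed(seed: int) -> int:
    """-1 means 'pick a random seed'; any other value is used as-is."""
    return random.randint(0, MAX_SEED) if seed == -1 else seed


def initial_noise(seed: int, size: int) -> List[float]:
    """Draw the starting Gaussian noise from a generator seeded with `seed`.
    A dedicated Random instance keeps this independent of global state."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


seed = resolve_seed(-1)
print("seed used:", seed)  # save this value to reproduce the image later

a = initial_noise(1234, 4)
b = initial_noise(1234, 4)
c = initial_noise(1235, 4)
print(a == b)  # same seed: identical starting noise
print(a == c)  # different seed: different starting noise
```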

Sampler (Diffusion Sampler Method)

The Sampler determines how Stable Diffusion removes noise during image generation. Different samplers use different mathematical methods to estimate the next denoising step, impacting the final image’s smoothness, detail, and coherence. Some samplers require more steps for high-quality output, while others can achieve good results with fewer iterations.

Common Samplers and Their Effects:

- DDIM (Denoising Diffusion Implicit Models): Fast and efficient; needs fewer steps (10-25) but may sacrifice fine details.
- PLMS (Pseudo Linear Multi-Step): Faster than DDIM but sometimes struggles with detailed features.
- Euler / Euler A (Ancestral): Sharp, well-defined images; Euler A injects fresh noise at every step, making results more varied.
- Heun: Balances detail and smoothness; it evaluates the model about twice per step, so each step is slower than with Euler.
- DPM++ (based on DPM-Solver++, where DPM stands for Diffusion Probabilistic Models): Advanced sampler offering high-quality output with fewer steps.
- LMS (Linear Multistep): Provides smooth gradients and balanced details.
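To make the difference between deterministic and ancestral samplers concrete, here is a scalar toy of the Euler and Euler ancestral update rules, modeled on the formulation in the k-diffusion library that Automatic1111 uses for these samplers. `toy_denoiser` is a hypothetical stand-in for the U-Net (the ideal denoiser for standard-normal data), and the sigma schedule is made up; the point is only that Euler returns the same result every run, while Euler A changes with the random draws even from the same starting noise.

```python
import random
from typing import Callable, List, Tuple

Denoiser = Callable[[float, float], float]


def toy_denoiser(x: float, sigma: float) -> float:
    """Hypothetical stand-in for the U-Net: the optimal denoiser when the
    clean data is standard normal, E[x0 | x] = x / (1 + sigma^2)."""
    return x / (1.0 + sigma ** 2)


def sample_euler(x: float, sigmas: List[float], denoise: Denoiser) -> float:
    """Deterministic Euler: follow the estimated direction to the next sigma."""
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        d = (x - denoise(x, sigma)) / sigma  # slope of x with respect to sigma
        x = x + d * (sigma_next - sigma)
    return x


def ancestral_step(sigma: float, sigma_next: float) -> Tuple[float, float]:
    """Split the move to sigma_next into a deterministic part (down to
    sigma_down) and fresh noise of size sigma_up (eta = 1)."""
    sigma_up = min(sigma_next, (sigma_next ** 2 * (sigma ** 2 - sigma_next ** 2) / sigma ** 2) ** 0.5)
    sigma_down = max(sigma_next ** 2 - sigma_up ** 2, 0.0) ** 0.5
    return sigma_down, sigma_up


def sample_euler_ancestral(x: float, sigmas: List[float], denoise: Denoiser,
                           rng: random.Random) -> float:
    """Euler ancestral: an Euler step to sigma_down, then re-inject random noise."""
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        d = (x - denoise(x, sigma)) / sigma
        sigma_down, sigma_up = ancestral_step(sigma, sigma_next)
        x = x + d * (sigma_down - sigma)
        x = x + rng.gauss(0.0, 1.0) * sigma_up
    return x


sigmas = [10.0, 5.0, 2.0, 1.0, 0.5, 0.0]  # made-up schedule, noisiest first
start = 3.0  # same starting noise for every run

print("Euler, run 1:  ", sample_euler(start, sigmas, toy_denoiser))
print("Euler, run 2:  ", sample_euler(start, sigmas, toy_denoiser))
print("Euler A, rng 1:", sample_euler_ancestral(start, sigmas, toy_denoiser, random.Random(1)))
print("Euler A, rng 2:", sample_euler_ancestral(start, sigmas, toy_denoiser, random.Random(2)))
```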

Choosing a Sampler:

If speed is a priority, use DDIM (fewer steps, faster results).

If detail is important, use Euler or DPM++.

If experimenting with creative variance, use Euler A (or another ancestral sampler), which adds fresh randomness at every step.

Experimenting with different samplers may yield varying results in style and detail.

Here are the recommended samplers for different styles.

Text Prompt for Image Generation

Stable Diffusion generates images based on textual descriptions, determining what appears in the final image. A well-structured prompt significantly impacts output quality.

General Structure of a Prompt

Below are key elements to consider when crafting prompts:

- Subject: Clearly specify the main object (e.g., a dog, a cat, a bear, a warrior with a sword).
- Image Type: Define the medium: painting, photograph, watercolor, oil painting, comic, digital art, illustration, 3D render, sculpture, etc.
- Artist/Style: Combine multiple artists or describe the style explicitly (e.g., "Studio Ghibli + Van Gogh" or "very coherent symmetrical artwork").
- Color Scheme: Define the dominant colors (e.g., "blue color scheme").
- Reference Platform: Mention where similar styles appear (e.g., "trending on CIVITAI").

Example Prompt #1:

A photograph of a red haired woman, realistic face, symmetrical, highly detailed, ultra HD using the style trending on CIVITAI.

Example Prompt #2:

A photograph of a dark haired woman, realistic face, symmetrical, highly detailed, ultra HD using the style trending on Pixiv.
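When producing many variations, it can help to keep the elements above as separate fields and assemble them in a fixed order, medium and subject first. `PromptSpec` below is a hypothetical helper, not part of any Stable Diffusion tool; it simply joins whichever elements are filled in with commas.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt elements described above."""
    subject: str
    image_type: str = ""
    style: str = ""
    color_scheme: str = ""
    platform: str = ""
    extras: List[str] = field(default_factory=list)

    def build(self) -> str:
        # Medium first so the prompt reads "A photograph of ...", then the
        # remaining elements in order, skipping any left empty.
        head = f"{self.image_type} of {self.subject}" if self.image_type else self.subject
        parts = [head, *self.extras, self.style, self.color_scheme, self.platform]
        return ", ".join(p.strip() for p in parts if p.strip())


spec = PromptSpec(
    subject="a red haired woman",
    image_type="A photograph",
    extras=["realistic face", "symmetrical", "highly detailed", "ultra HD"],
    platform="trending on CIVITAI",
)
print(spec.build())
```

Swapping a single field, such as the platform or the color scheme, then changes one element while the rest of the prompt stays fixed.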

Advanced Prompting Techniques

To improve generated images, add detailed descriptions:

- Secondary Elements: Add 2-3 additional objects (e.g., "beautiful forest background, desert!!, (((sunset)))").
- Character Features: Add details such as "detailed gorgeous face, silver hair, olive skin, delicate features".
- Special Effects: Use terms like "insanely detailed, intricate, surrealism, smooth, sharp focus, 8K resolution".
- Lighting Effects: Specify lighting conditions (e.g., "Natural Lighting, Studio Lighting, Cinematic Lighting, Backlight, Rim Light").
- Photographic Terms: Use camera and composition vocabulary (e.g., "Cinematic, Golden Hour, Depth of Field, Side-View, Bokeh").
- Camera Position: Specify the viewpoint (e.g., "Front View, Back View, Left View, Right View").
- Camera Type: Mention the camera model (e.g., "Canon 70D, Nikon D850, Fujifilm X100, Fujifilm XT3").
- Image Quality: Push for high fidelity by adding "professional, award-winning, groundbreaking, ultra HD, film grain".

Example Prompt #3:

a young woman with gorgeous face, delicate features, symmetrical, highly detailed

Prompt Weighting

Stable Diffusion allows weight adjustments to control element emphasis:

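Increase prominence: assign a higher positive weight. In the pipe syntax, each sub-prompt carries its own weight, e.g., A planet in space:10 | bursting with color:4 | aliens:-10. Automatic1111 uses (element:weight) instead, where values above 1 add emphasis, e.g., (element:1.3).

Decrease prominence: in the pipe syntax, a negative weight steers the image away from an element, e.g., tree:-10 strongly discourages trees from appearing. In Automatic1111, a weight below 1, such as (element:0.5), de-emphasizes an element.

Word order matters: earlier words tend to carry more weight. If an element is missing, move it toward the beginning.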
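The weighting notations above can be read mechanically. The sketch below splits the pipe syntax into (text, weight) pairs, treating a sub-prompt without a trailing `:number` as weight 1.0 (an assumption), and computes the emphasis multiplier for a single Automatic1111-style token, where each layer of `( )` multiplies attention by 1.1, each layer of `[ ]` divides it by 1.1, and an explicit `(text:weight)` sets the value directly. How each front end turns these weights into conditioning differs, so treat this as a reading aid rather than a reimplementation; both function names are hypothetical.

```python
import re
from typing import List, Tuple

NUMBER = r"-?\d+(?:\.\d+)?"


def parse_pipe_prompt(prompt: str) -> List[Tuple[str, float]]:
    """Split 'A planet in space:10 | aliens:-10' into (text, weight) pairs.
    A part without a trailing ':number' gets weight 1.0 (assumption)."""
    pairs = []
    for part in prompt.split("|"):
        part = part.strip()
        match = re.fullmatch(rf"(.*?):\s*({NUMBER})", part)
        if match:
            pairs.append((match.group(1).strip(), float(match.group(2))))
        elif part:
            pairs.append((part, 1.0))
    return pairs


def emphasis_weight(token: str) -> float:
    """Weight of one Automatic1111-style token such as '(((sunset)))',
    '[tree]' or '(sunset:1.3)': an explicit number wins; otherwise each
    ( ) layer multiplies by 1.1 and each [ ] layer divides by 1.1."""
    t = token.strip()
    explicit = re.fullmatch(rf"\((.+):\s*({NUMBER})\)", t)
    if explicit:
        return float(explicit.group(2))
    weight = 1.0
    while len(t) >= 2 and (t[0], t[-1]) in {("(", ")"), ("[", "]")}:
        weight = weight * 1.1 if t[0] == "(" else weight / 1.1
        t = t[1:-1]
    return weight


print(parse_pipe_prompt("A planet in space:10 | bursting with color:4 | aliens:-10"))
print(round(emphasis_weight("(((sunset)))"), 3))
print(round(emphasis_weight("[tree]"), 3))
print(emphasis_weight("(sunset:1.3)"))
```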

Negative Prompts

Negative prompts exclude unwanted elements from the image. This is useful for avoiding distortions, unwanted themes, or specific artifacts.

Common Negative Prompts

- Fix anatomy issues: disfigured, deformed hands, blurry, grainy, broken, extra digits, bad anatomy.
- Avoid nudity: nudity, bare breasts.
- Avoid black-and-white images: black and white, monochrome.

For example, here is the same prompt as Example #1 with the following negative prompt:

Extra heads, extra faces, multiple faces, extra eyes, deformed face, disfigured, asymmetric, duplicate, tattoos, marks

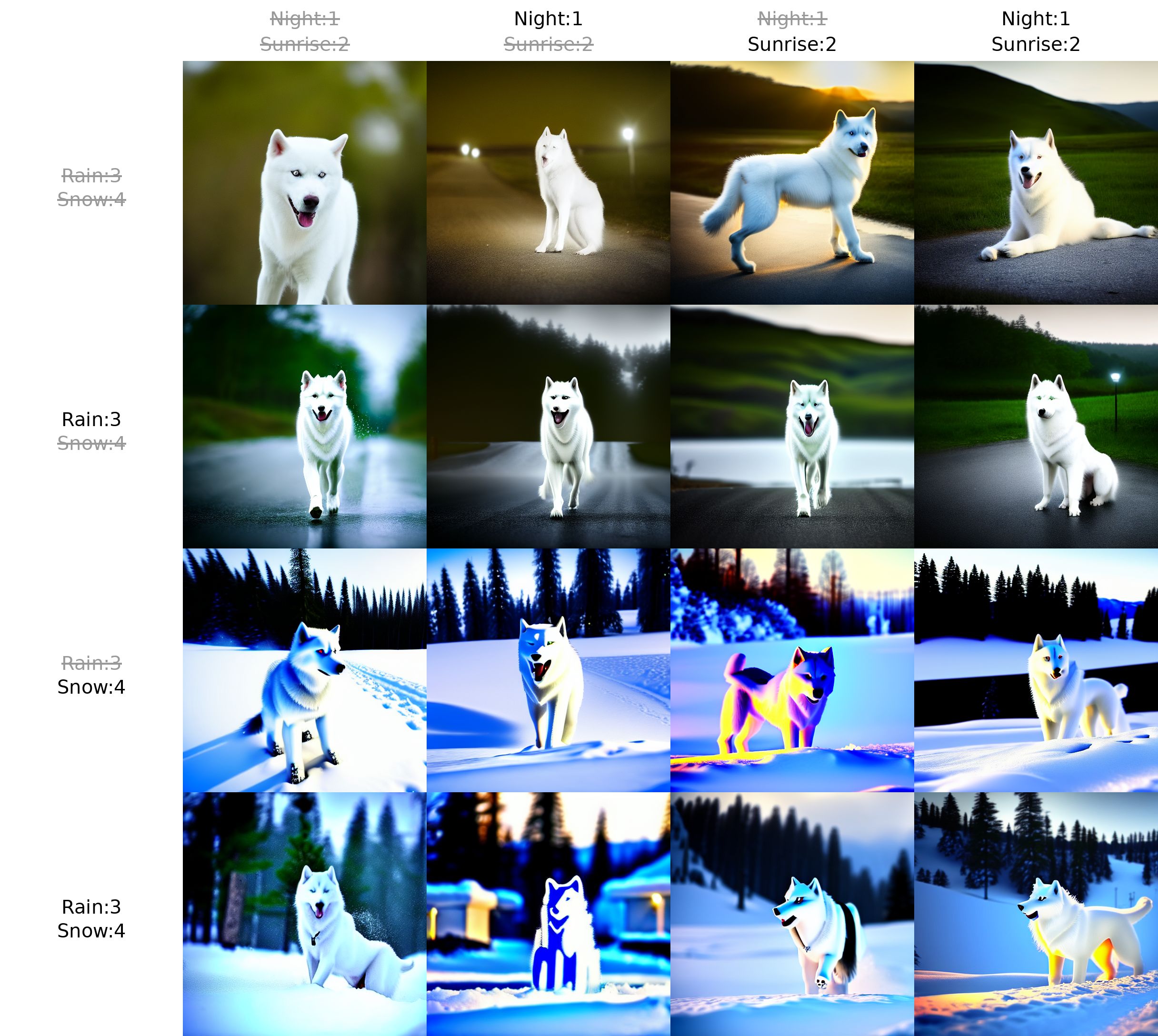
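In Automatic1111 and in diffusers, the negative prompt takes the place of the empty "unconditional" prompt in the classifier-free guidance step, so each denoising step is pushed toward the positive prompt and away from the negative one. The sketch below shows that substitution with toy lists; the values and the `guided_prediction` name are illustrative only.

```python
from typing import List, Optional


def guided_prediction(pred_positive: List[float],
                      pred_negative: Optional[List[float]],
                      pred_empty: List[float],
                      cfg_scale: float) -> List[float]:
    """CFG step where the negative prompt's prediction, if given, replaces
    the prediction for the empty prompt as the point to steer away from."""
    base = pred_negative if pred_negative is not None else pred_empty
    return [b + cfg_scale * (p - b) for p, b in zip(pred_positive, base)]


positive = [1.0, 0.0]  # toy prediction for the positive prompt
negative = [0.0, 1.0]  # toy prediction for, e.g., "extra faces, deformed face"
empty = [0.5, 0.5]     # toy prediction for the empty prompt

print(guided_prediction(positive, None, empty, 7.0))
print(guided_prediction(positive, negative, empty, 7.0))
```

With a negative prompt, the second component is pushed further below zero than without one, which is the numeric counterpart of steering the image away from the unwanted features.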

Prompt Matrix (Variations in Image Generation)

The Prompt Matrix function generates multiple variations of an image by combining different elements. This is particularly useful for creating assets for animations or video sequences.

In a prompt:

- The elements that remain constant across all images in the matrix are enclosed in <angle brackets>.
- The elements that vary between images are preceded by a pipe character (|). This character is later replaced with either a comma or a space, depending on the script's configuration.

For example, to generate an image sequence of a white, husky-like dog under different conditions:

<a white dog like husky, 4k, > lights | Night:1 | Sunrise:2 | Rain:3 | Snow:4

This generates different versions of the same image under various lighting and weather conditions.

If you are using Automatic1111, you can select the Prompt Matrix function from the "Script" dropdown below the "Seed" section.
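The combination logic can be sketched in a few lines. This follows our understanding of Automatic1111's Prompt Matrix script, which we assume treats the text before the first `|` as the constant part and renders every on/off combination of the remaining parts (2^n prompts for n optional parts); the angle-bracket notation described above is not handled here, and `expand_prompt_matrix` is a hypothetical name.

```python
from typing import List


def expand_prompt_matrix(prompt: str, delimiter: str = ", ") -> List[str]:
    """Return every combination of the optional parts of 'base|opt1|opt2|...'.
    Bit n of the combination index decides whether optional part n is used."""
    base, *optional = [part.strip().strip(",").strip() for part in prompt.split("|")]
    prompts = []
    for combo in range(2 ** len(optional)):
        chosen = [opt for n, opt in enumerate(optional) if combo & (1 << n)]
        prompts.append(delimiter.join([base, *chosen]))
    return prompts


for p in expand_prompt_matrix("a white husky-like dog, 4k|night|snow"):
    print(p)
```

The `delimiter` argument plays the role of the comma-or-space setting mentioned above.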

If you're interested in converting images into animations, consider using Deforum Stable Diffusion.

Conclusion

Understanding Stable Diffusion’s parameters enables precise control over image generation, improving quality and efficiency. By experimenting with different CFG values, steps, samplers, and prompt structures, users can fine-tune their creations to achieve stunning results.

For further enhancements, tools like PromptHero, OpenArt, and PromptMatrix can help you find existing prompts and discover new creative possibilities. If you need to construct a new prompt from scratch, consider using a text-to-image prompt helper such as the one we provide at Tynion.
