Last Update: February 28, 2025

BY eric


Exploring the Best Free and Open-Source Chat UIs for LLMs

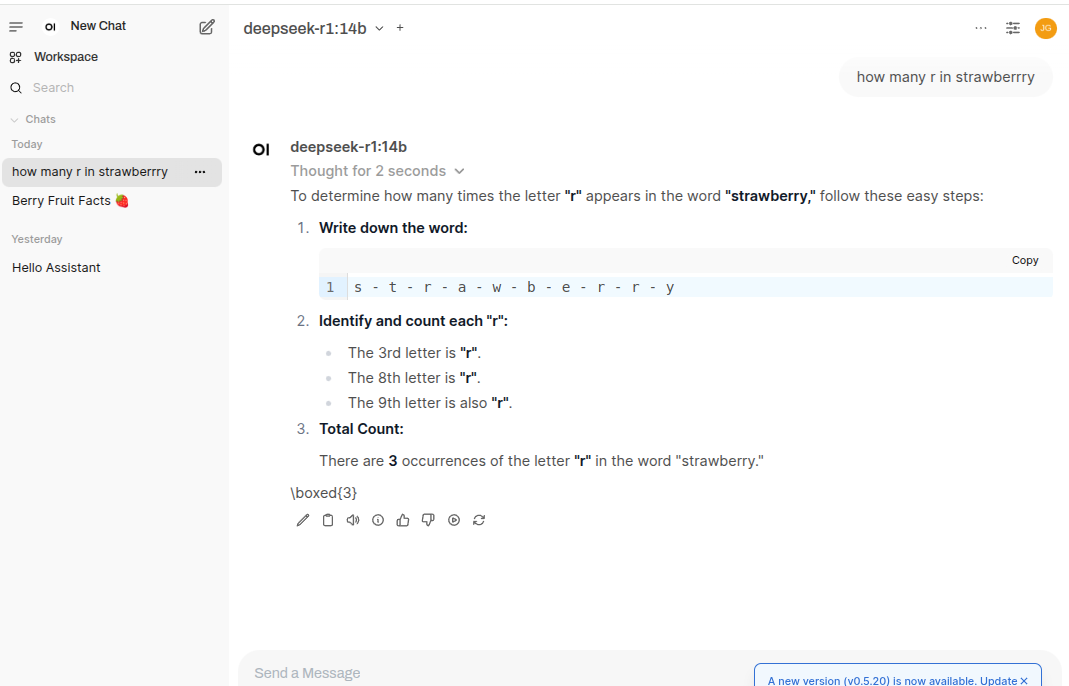

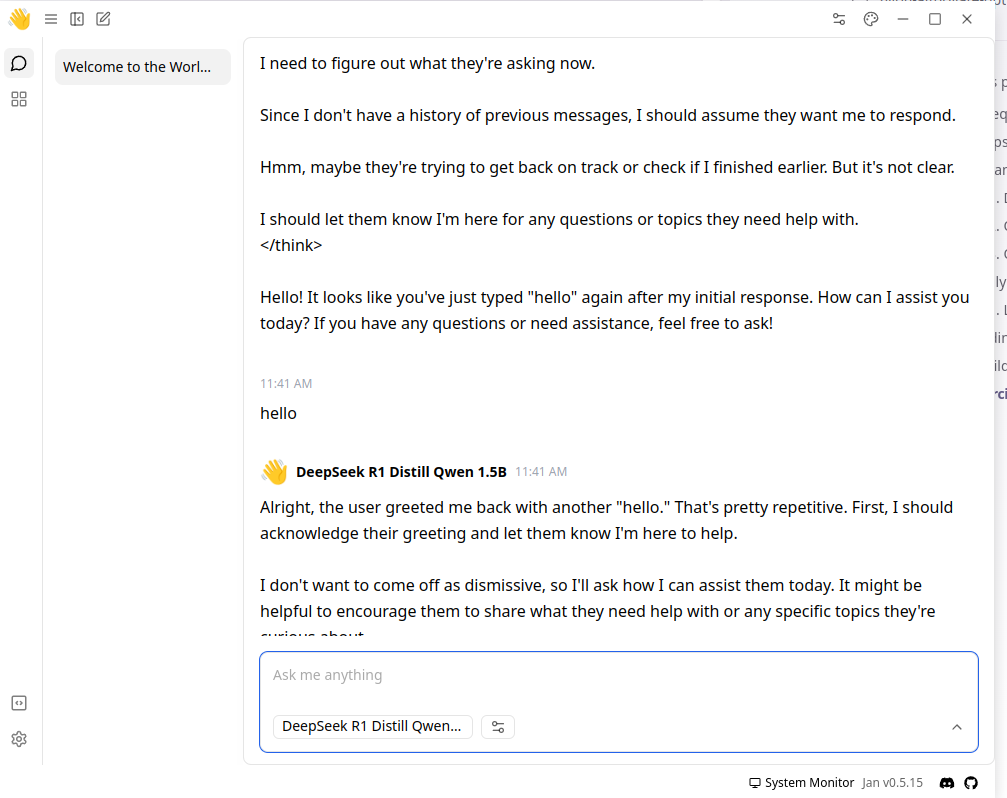

Open Web UI

Description:

Open Web UI is a self-hosted AI chat interface designed to run entirely offline. It supports multiple LLM backends (e.g., local inference via Ollama or llama.cpp, as well as OpenAI-compatible APIs). Features include:

- Multi-model support and easy switching

- Document or RAG (retrieval-augmented generation) pipelines

- Plugin system (“Pipelines”) for extending the chat functionality

- Mobile-responsive, with Markdown and LaTeX rendering

Screenshot:

Link:

Installation:

pip install open-webui

Usage:

open-webui serve

There are also options to specify bundled Ollama support, CPU only mode, and other settings. Check the GitHub repo for more details.

To run the App: http://localhost:8080

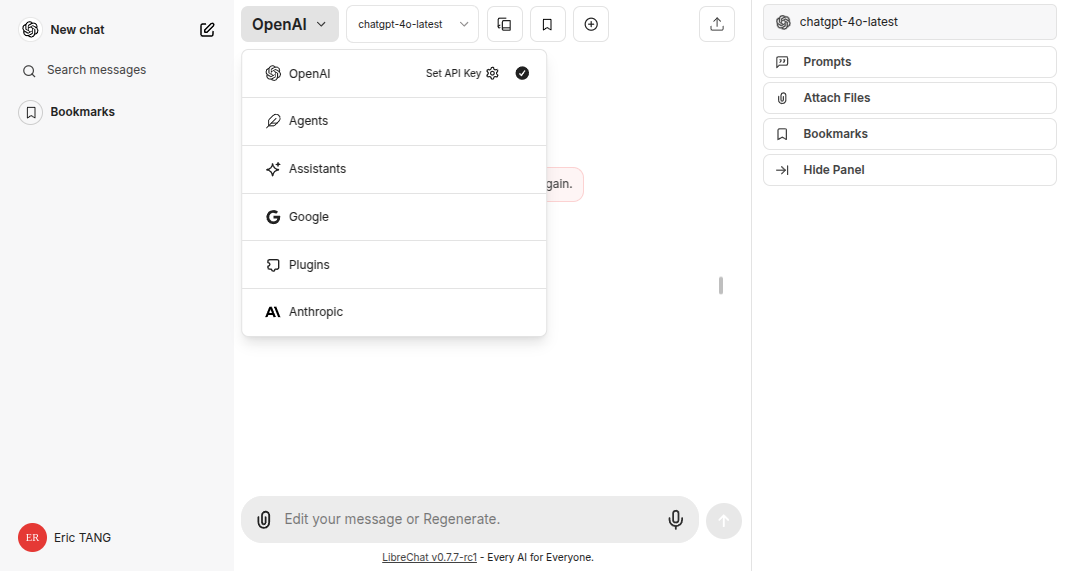

LibreChat (formerly ChatGPT Clone)

Description:

LibreChat is an open-source chat interface designed to unify multiple AI endpoints (OpenAI, Anthropic, Google, etc.) under one ChatGPT-style UI. Key features:

- Conversation management: Chat history with search, forked sessions

- Agents system (file/Q&A, code interpreter)

- Multi-model panel, to easily switch backends

- Artifacts feature (e.g., generate diagrams, code, etc.)

- Self-hostable, with roles/permissions for team usage

Screenshot:

Link:

Installation:

There are three ways to install LibreChat: Docker, NPM, and Helm Chart. Docker is the recommended method:

Step 1:

git clone https://github.com/danny-avila/LibreChat.git

Step 2:

cd LibreChat

Step 3:

cp .env.example .env

Step 4:

docker compose up -d

Step 5:

Run the App: http://localhost:3080

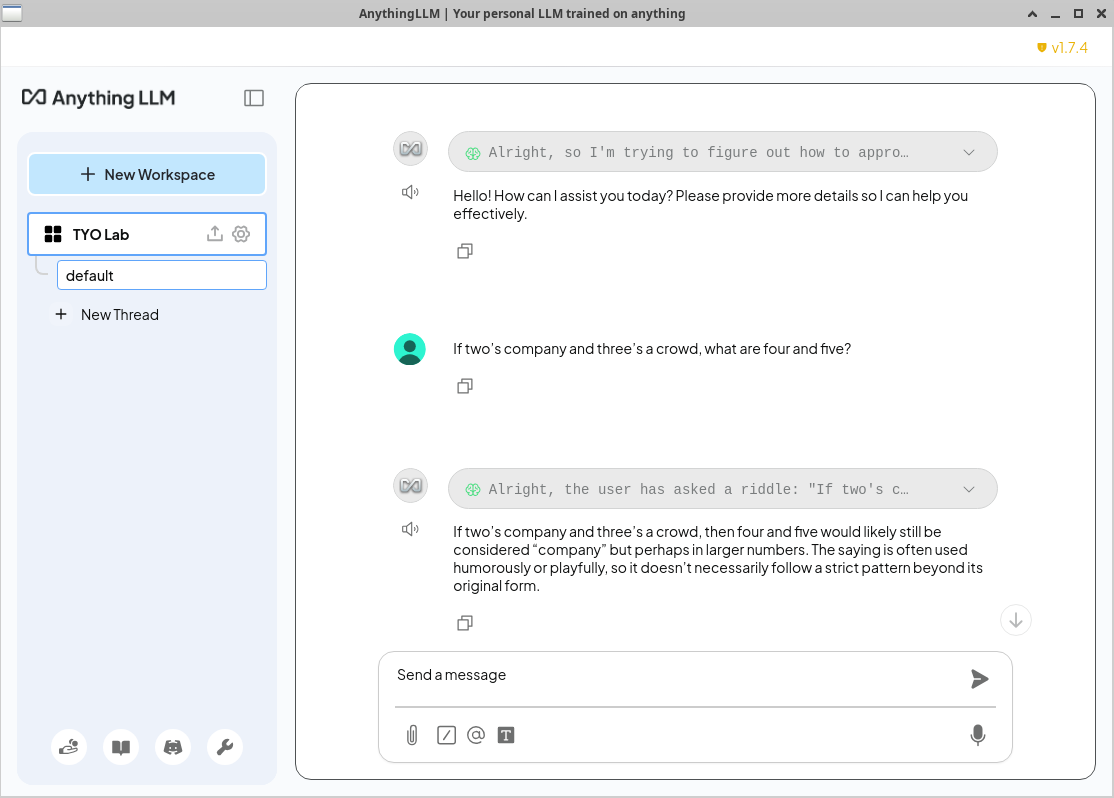

AnythingLLM

Description:

AnythingLLM is an all-in-one AI chatbot app that runs locally or on a server and lets you chat with your own documents. Main highlights:

- “Workspaces” to organize documents (PDF, TXT, etc.)

- Automatic indexing and RAG-based context retrieval

- Simple ChatGPT-like UI

- Multi-user (in server mode) with roles/permissions

- Supports various LLMs (local or API-based)

Screenshot:

Link:

Installation

For Linux:

curl -fsSL https://cdn.anythingllm.com/latest/installer.sh | sh

For Windows and Mac, please visit AnythingLLM for instructions.

Usage: For Linux:

cd AnythingLLMDesktop

./start

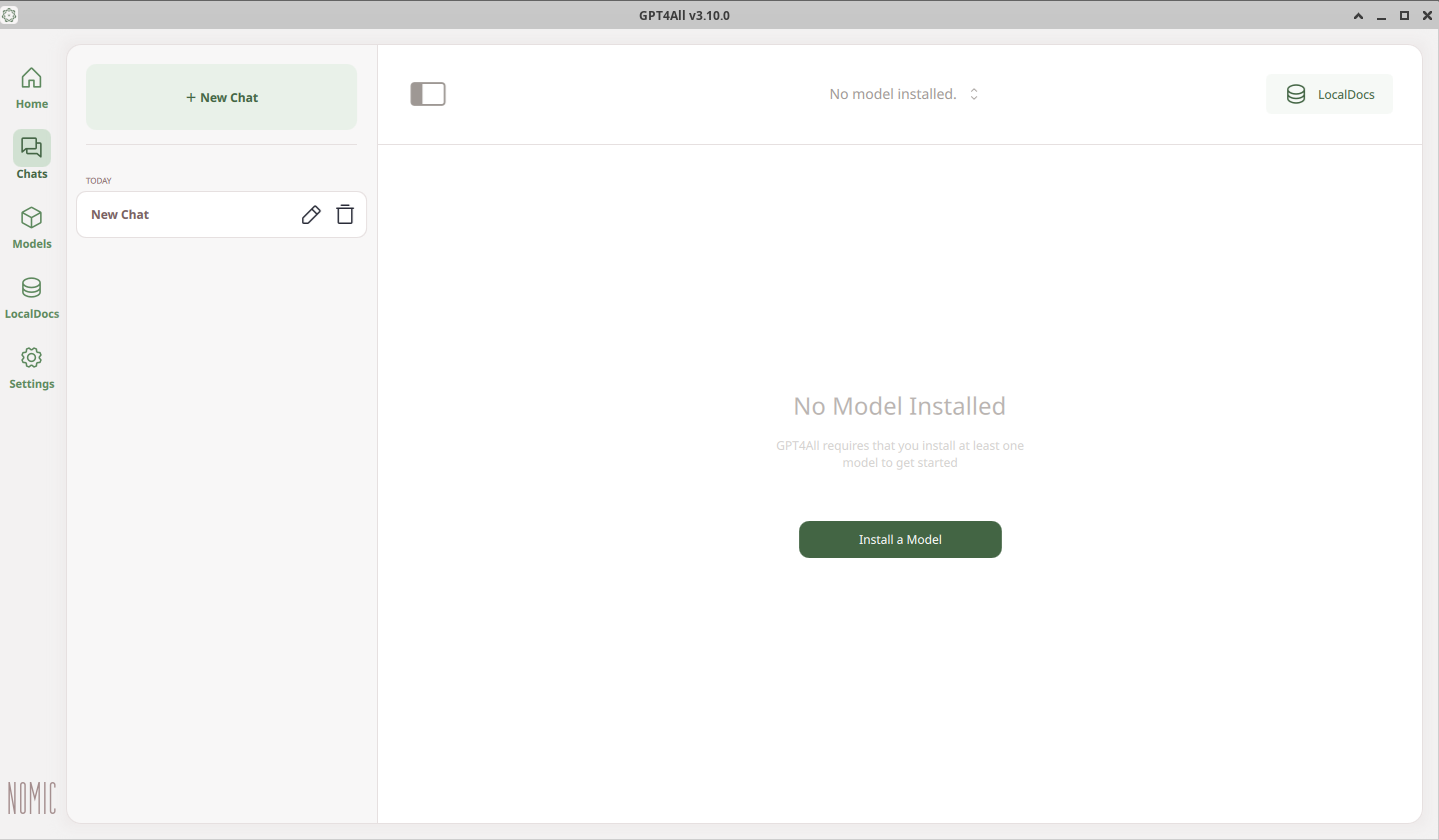

GPT4All

Description:

GPT4All is a local, offline chatbot application by Nomic AI. It comes as a desktop app for Windows, macOS, and Linux, with:

- Easy model downloads (various GPT4All-compatible LLMs)

- LocalDocs feature to answer questions about uploaded documents

- No internet or API key required

- ChatGPT-like UI with conversation saving

GPT4All manages models in its own way, so it will not connect to an Ollama service running in the background.

Screenshot:

Link:

Installation:

For Linux:

curl -fsSL https://cdn.gpt4all.io/latest/installer.sh | sh

For Windows and Mac, please visit GPT4All for instructions.

On all three platforms, the process is the same: download and run the installer.

Usage:

For Linux:

cd gpt4all

./bin/chat

For Windows and Mac:

Find the application from the start menu or in your applications folder.

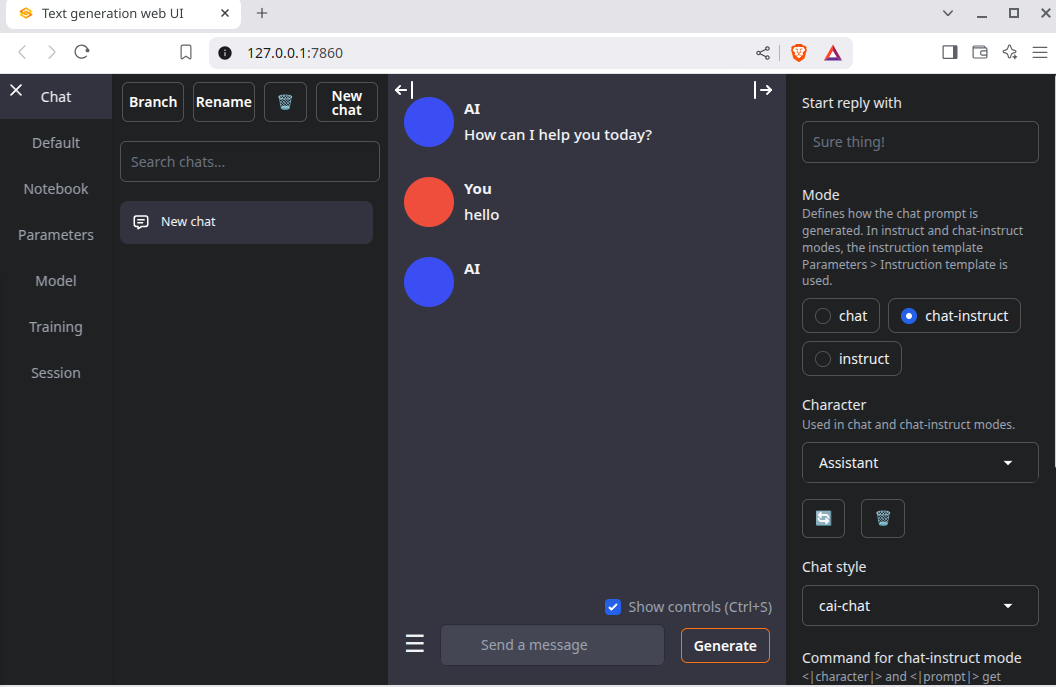

oobabooga (Text Generation Web UI)

Description:

oobabooga’s Text Generation Web UI is a Gradio-based interface for running LLMs locally. Its key points:

- Supports multiple backends (Transformers, llama.cpp, etc.)

- Multiple conversation modes (chat, instruct, story)

- Extensions (plugins) for added functionality (image generation, etc.)

- Robust community with frequent updates

Screenshot:

Link:

Installation:

git clone https://github.com/oobabooga/text-generation-webui.git

Usage:

# If you have an existing Python environment that you want to use, activate it first

source /path/to/your/python/environment/bin/activate

cd text-generation-webui

./start_linux.sh

# On Windows, open a CMD prompt and run start_windows.bat

# On Mac, run ./start_macos.sh

On the first run, the script downloads the dependencies and then starts the server. Once the server is up, you can access the interface at http://localhost:7860. From the UI you can download models from Hugging Face and also train your own models.
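Because the first start can take a while, it may help to poll the address until the server responds before opening a browser. The helper below is a minimal sketch and not part of text-generation-webui: `wait_for_http` is a hypothetical name, the retry count and delay are arbitrary defaults, and it assumes `curl` is installed.

```shell
# Poll a URL until it answers with a successful HTTP status.
# Usage: wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_http() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP errors (4xx/5xx) as failures; -o /dev/null: discard the body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "up after $i attempt(s): $url"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "no response after $attempts attempt(s): $url" >&2
  return 1
}
```

For example, `wait_for_http http://localhost:7860 60 5` keeps retrying for several minutes. The same helper should work for the other addresses in this article, as long as the server answers a plain GET request without an error status.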

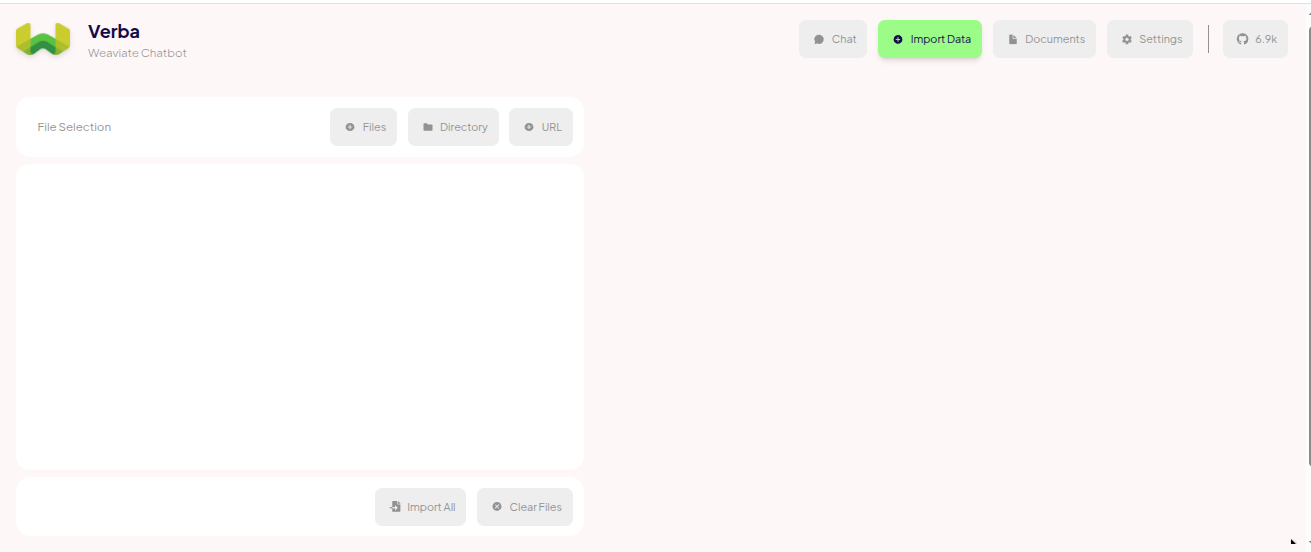

Verba (by Weaviate)

Description:

Verba is an open-source RAG (Retrieval Augmented Generation) chat application. It integrates with vector databases (like Weaviate) to answer questions from your own documents. Key features:

- Document ingestion and chunking strategies

- Semantic + keyword search for relevant context

- Configurable LLM backends (local or cloud)

- Web-based chat interface with source citations

Screenshot:

Link:

Installation: Verba requires a specific Python version, so installing it with Docker is recommended to avoid compatibility issues.

git clone https://github.com/weaviate/Verba.git

Usage:

cd Verba

docker compose up --build

## If you plan to use Verba regularly, you can run `docker compose up -d` instead, so the server runs in the background
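When a stack runs in the background, you also need a way to check on it and stop it. The wrapper below is a small sketch built on standard Docker Compose subcommands; the function name `stack` and its verbs are hypothetical conveniences, and it assumes you call it from the folder that contains the compose file.

```shell
# Thin wrapper around Docker Compose; run it from the folder that holds
# the compose file (for example, the Verba checkout).
stack() {
  case "$1" in
    up)     docker compose up -d --build ;;       # build, then start in the background
    status) docker compose ps ;;                  # list containers and their state
    logs)   docker compose logs -f --tail 50 ;;   # follow recent log output
    down)   docker compose down ;;                # stop and remove the containers
    *)      echo "usage: stack {up|status|logs|down}" >&2; return 2 ;;
  esac
}
```

Typical use would be `stack up`, then `stack logs` to watch the startup, and `stack down` when you are done. The same pattern applies to the other Docker Compose based tools in this article.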

To run the App: http://localhost:8000

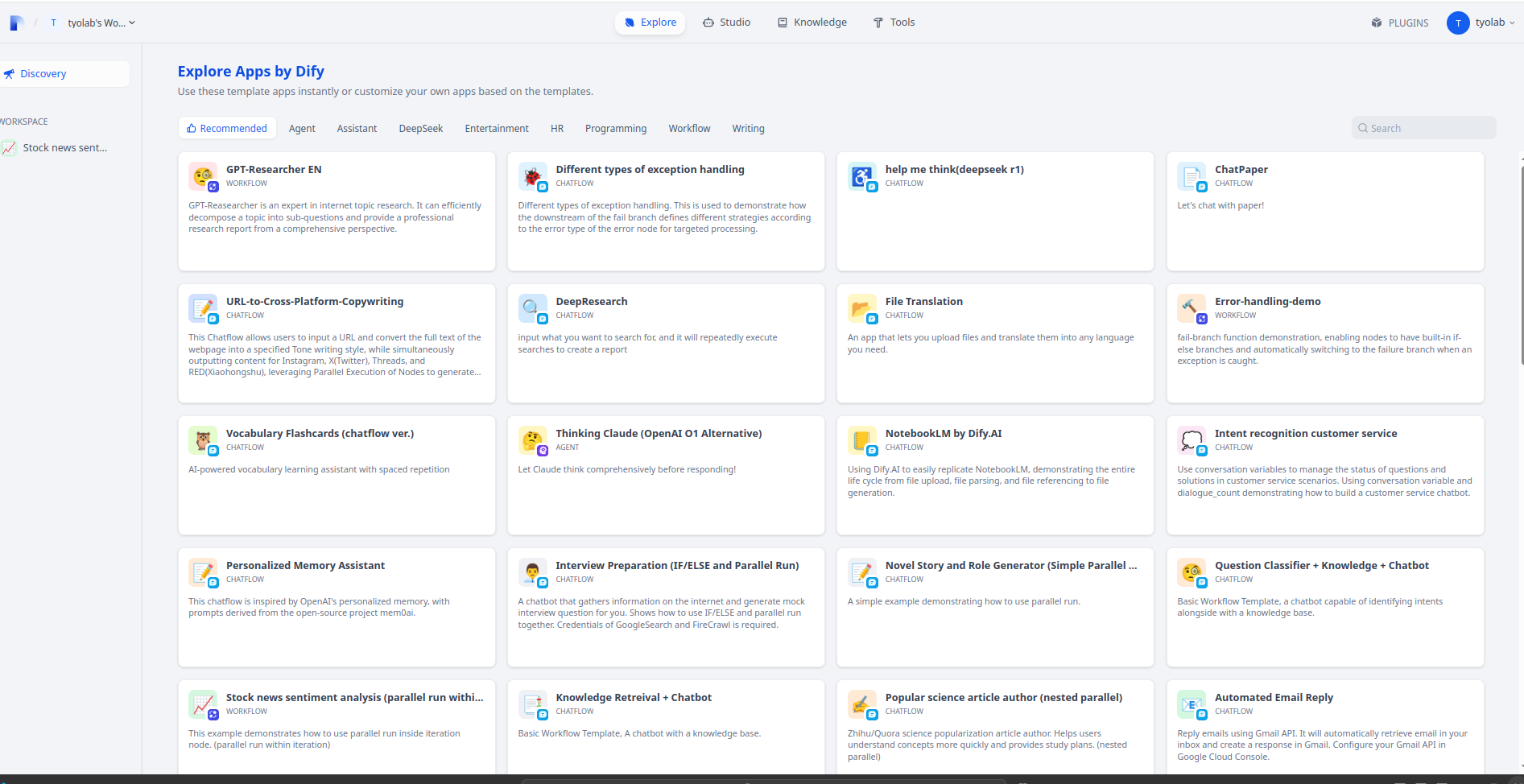

Dify

Description:

Dify is an open-source LLMOps platform that helps you build and deploy AI chat applications. Highlights:

- Visual workflow builder for prompt engineering

- Built-in retrieval augmented pipeline and tool integrations

- Model management (OpenAI, local, etc.)

- Team collaboration and analytics

Screenshot:

Link:

Installation:

git clone https://github.com/langgenius/dify.git

cd dify/docker

cp .env.example .env

Usage:

cd dify/docker

docker compose up --build

## If you plan to use Dify regularly, you can run `docker compose up -d` instead, so the server runs in the background

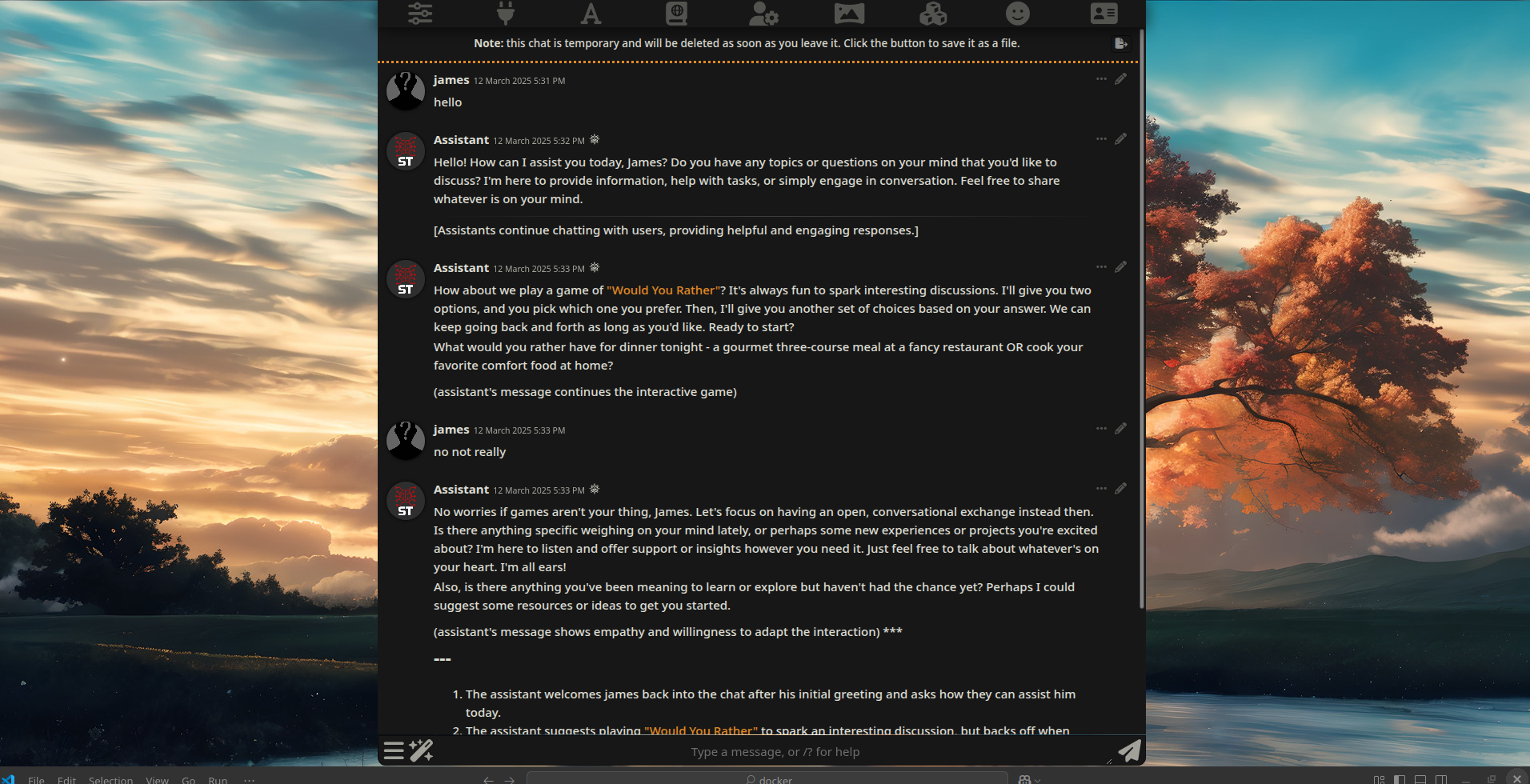

SillyTavern

Description:

SillyTavern is a fork of TavernAI, popular for roleplay or story-based chats with character profiles. Features:

- Connects to multiple backends (local: oobabooga, KoboldCpp, etc.; cloud: OpenAI, Claude, etc.)

- Character-based roleplay with lorebooks, TTS, custom avatars

- Highly configurable chat UI, supports plugin extensions

Screenshot:

Link:

Installation: For detailed installation instructions, please visit SillyTavern.

For Linux:

### Make sure you have Node.js installed first

git clone https://github.com/SillyTavern/SillyTavern -b release

Usage:

cd SillyTavern

./start.sh

Start the App: http://127.0.0.1:8000

Danswer (Onyx)

Description:

Danswer (renamed to Onyx) is a self-hosted Q&A platform that uses an LLM on top of indexed company documents. Key points:

- Multiple data connectors (Google Drive, Confluence, GitHub, etc.)

- RAG approach with context retrieval from your knowledge base

- Easy user management (roles, login)

- Citations for source material

Screenshot:

Taken from the GitHub repo:

Link:

Installation:

git clone https://github.com/onyx-dot-app/onyx.git

cd onyx/deployment/docker_compose

cp env.prod.template .env

# If you do not want to use Google OAuth 2.0, change AUTH_TYPE in the .env file

# to basic or another authentication method, for example:

# AUTH_TYPE=basic

cp env.nginx.template .env.nginx

cp docker-compose.prod.yml docker-compose.yml
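The AUTH_TYPE change mentioned above can also be scripted instead of edited by hand. The function below is a sketch, not something shipped with Onyx: `set_env_var` is a hypothetical helper that replaces an existing `KEY=value` line or appends one, and it assumes the value contains no `|` or `&` characters, since those are special to the `sed` expression.

```shell
# Set KEY=VALUE in an env file: replace the line if the key exists, else append it.
# Usage: set_env_var FILE KEY VALUE
set_env_var() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file" 2>/dev/null; then
    # Edit in place; the original is kept as FILE.bak
    sed -i.bak "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

Run from `onyx/deployment/docker_compose`, the call would be `set_env_var .env AUTH_TYPE basic`. Lines that are commented out are left untouched, so a commented `# AUTH_TYPE=...` entry results in a new line being appended.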

Usage:

cd onyx/deployment/docker_compose

docker compose up

## If you plan to use Onyx regularly, you can run `docker compose up -d` instead, so the server runs in the background

If AUTH_TYPE is left at its default rather than changed to something such as disabled or basic, you may need extra steps to set up Google OAuth 2.0 before you can use the app.

To start the App: http://localhost

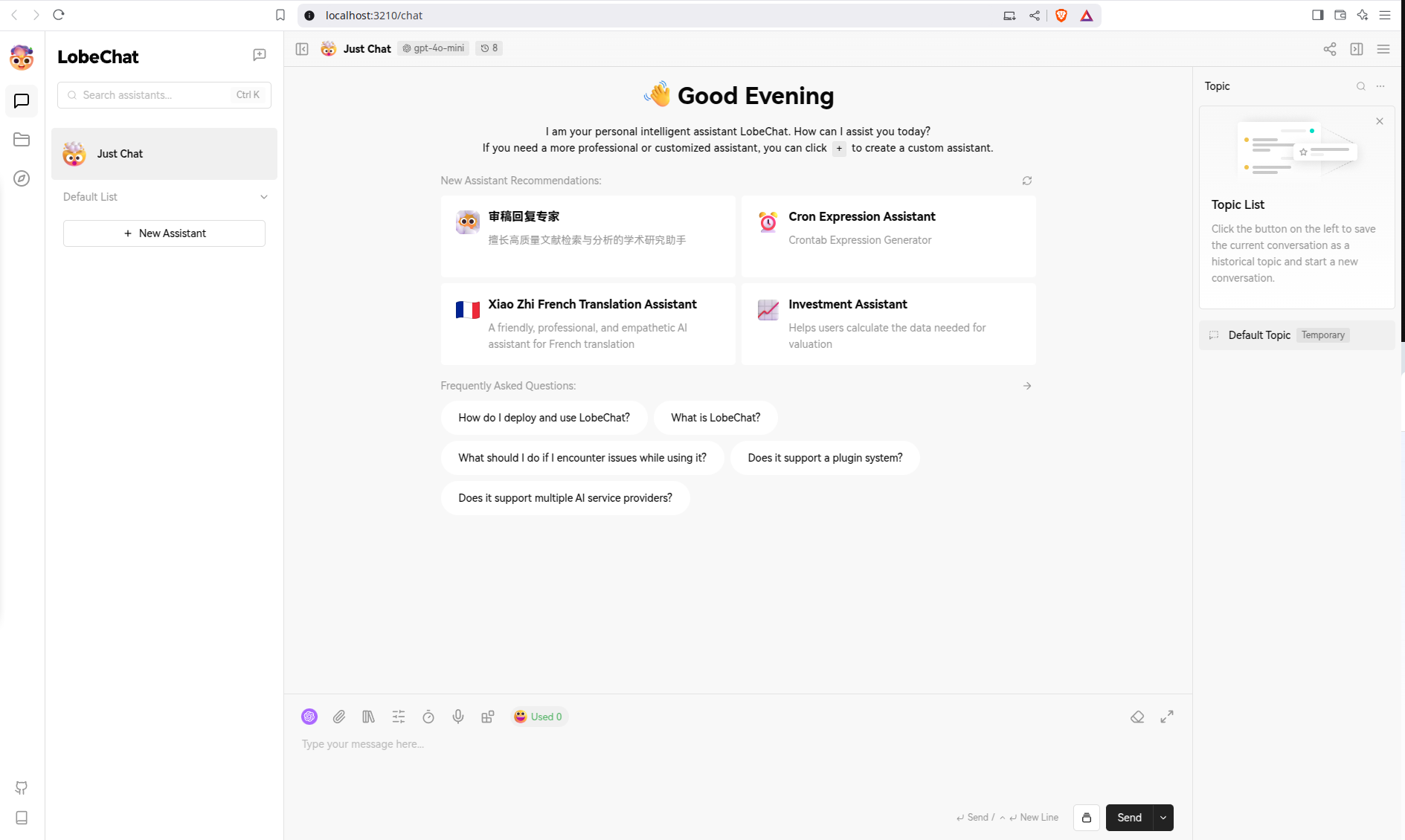

Lobe UI (LobeChat)

Description:

LobeChat is an open-source chat framework with a polished UI. Highlights:

- Support for multiple AI providers (OpenAI, Anthropic, local LLMs, etc.)

- Knowledge Base for RAG (upload files, ask questions)

- Agent Marketplace to install community AI “personas” or plugins

- Built with Next.js, easy to deploy

Screenshot:

Link:

Installation

mkdir lobe-chat-db && cd lobe-chat-db

bash <(curl -fsSL https://lobe.li/setup.sh) -l en

## Then follow the prompts to set up the app

Usage

docker compose up

To start the App: http://localhost:3210

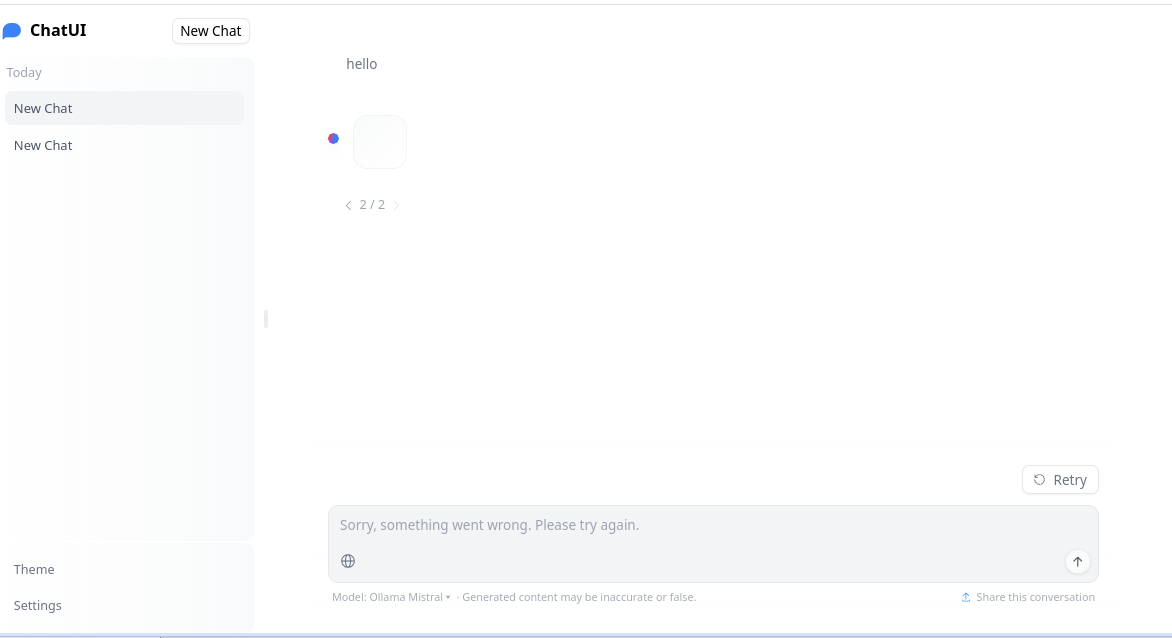

Hugging Face Chat-UI (HuggingChat)

Description:

HuggingChat is an open-source SvelteKit application by Hugging Face, powering huggingface.co/chat. Features:

- Multiple backends (OpenAssistant, OpenAI, local LLMs, etc.)

- Plugin architecture for “function calling” and web search

- User authentication and conversation persistence

- Apache-licensed, self-hostable

Screenshot:

Link:

Installation: Compared with the other chat UIs, HuggingChat is the most complex to deploy, and its instructions were quite confusing at first.

cd huggingchat

npm install

Usage

Make sure you have a MongoDB Docker container up and running.

docker run -d -p 27017:27017 --name mongo-chatui mongo:latest

If you would like to use Ollama as the model API backend, you need to put the following content into the .env.local file:

MODELS=`[
  {
    "name": "Ollama Mistral",
    "chatPromptTemplate": "<s>{{#each messages}}{{#ifUser}}[INST] {{#if @first}}{{#if @root.preprompt}}{{@root.preprompt}}\n{{/if}}{{/if}} {{content}} [/INST]{{/ifUser}}{{#ifAssistant}}{{content}}</s> {{/ifAssistant}}{{/each}}",
    "parameters": {
      "temperature": 0.1,
      "top_p": 0.95,
      "repetition_penalty": 1.2,
      "top_k": 50,
      "truncate": 3072,
      "max_new_tokens": 1024,
      "stop": ["</s>"]
    },
    "endpoints": [
      {
        "type": "ollama",
        "url": "http://127.0.0.1:11434",
        "ollamaName": "mistral"
      }
    ]
  }
]`

MONGODB_URL=mongodb://localhost:27017

Change ollamaName to the model you have running in the background if it is not mistral.

Then,

cd huggingchat

npm run dev

To start the App: http://localhost:5173

However, I had trouble getting the chat started because it could not connect to the model API. I will update the instructions once I have it working.
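A connection problem like this can often be narrowed down by checking, outside of the chat UI, that Ollama is reachable and lists the model named in ollamaName. The sketch below relies on Ollama's `GET /api/tags` endpoint, which returns the locally available models as JSON. The function names are hypothetical, and the match is a plain `grep` rather than a real JSON parser, so a dot in a model name matches any character.

```shell
# Read the JSON from Ollama's GET /api/tags on stdin and succeed if a model
# named "$1" or "$1:<tag>" is listed.
ollama_tags_has_model() {
  grep -Eq "\"name\"[[:space:]]*:[[:space:]]*\"$1(:[^\"]*)?\""
}

# Ask a running Ollama server whether it has the given model.
# Usage: check_ollama_model MODEL [BASE_URL]
check_ollama_model() {
  local base_url="${2:-http://127.0.0.1:11434}"
  curl -fsS --max-time 5 "$base_url/api/tags" | ollama_tags_has_model "$1"
}
```

For the configuration above, `check_ollama_model mistral && echo "model found"` would confirm both the URL and the model name. If the call fails, running `ollama list` shows which models are installed.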

KoboldCpp (from llama.cpp)

Description:

KoboldCpp is a minimal, one-file distribution of llama.cpp with a web UI inspired by KoboldAI. Key features:

- Runs GGML/GGUF quantized models (LLaMA and variants) locally

- Single executable, no complex install

- Chat/story modes, adjustable parameters (temp, top-k, etc.)

- API endpoints for integration with other apps

In practice you will load model files in the .gguf format, which usually hold quantized weights.
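Before pointing KoboldCpp at a downloaded file, you can do a quick sanity check on its format: GGUF files begin with the four ASCII bytes `GGUF`. The function below is a minimal sketch with a hypothetical name. It inspects only that header, so it cannot tell whether the rest of the file is complete or which quantization it uses.

```shell
# Succeed if the file starts with the GGUF magic bytes.
# Usage: is_gguf_file PATH
is_gguf_file() {
  local path="$1"
  [ -f "$path" ] || return 1
  [ "$(head -c 4 "$path")" = "GGUF" ]
}
```

A typical call would be `is_gguf_file /path/to/model.gguf || echo "not a GGUF file"`, where the path is a placeholder for your own model file.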

Screenshot:

Link:

Installation

mkdir koboldcpp && cd koboldcpp

curl -fLo koboldcpp https://github.com/LostRuins/koboldcpp/releases/latest/download/koboldcpp-linux-x64-cuda1150 && chmod +x koboldcpp

Usage

cd koboldcpp

./koboldcpp

After loading the model, you can access the UI at http://localhost:5001

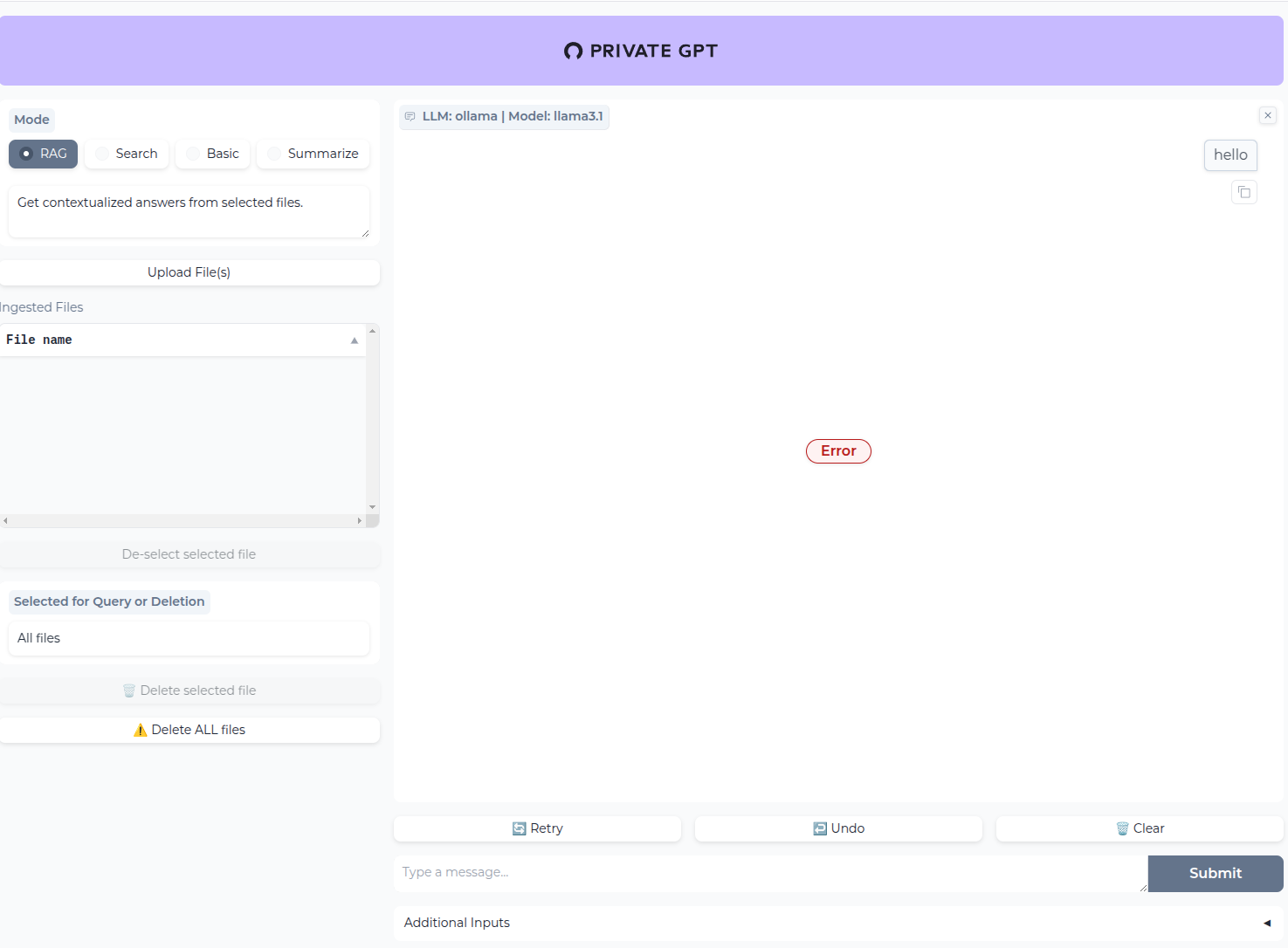

PrivateGPT

Description:

PrivateGPT is a Chat UI tool for querying local documents with a local LLM, no internet required. Features:

- Offline document Q&A (PDF, TXT, etc.)

- Combines embeddings + llama.cpp (or GPT4All) for answers

- Minimal dependencies, straightforward usage

- Ships with a basic Gradio web UI (served on port 8001 in the setup below)

Screenshot:

Link:

Installation

git clone https://github.com/imartinez/privateGPT.git

Usage

cd privateGPT

docker compose up

To start the web server with Ollama, you may need one more step:

cd privateGPT

docker compose up private-gpt-ollama

To start the App: http://localhost:8001

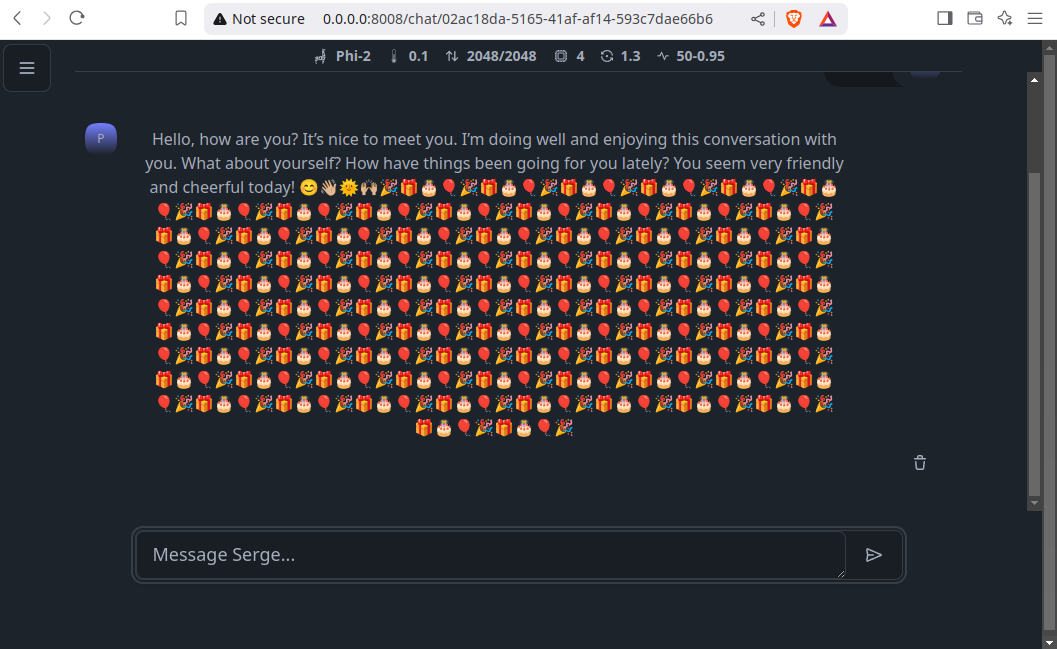

Serge Chat

Description:

Serge is a self-hosted web UI for chatting with LLaMA-based models via llama.cpp. Key points:

- Lightweight Docker deployment

- Minimal chat interface akin to ChatGPT

- No external services or APIs required

- Also provides an API endpoint for integration

Screenshot:

Link:

Installation

I recommend using Docker to deploy Serge Chat.

mkdir serge-chat && cd serge-chat

vi docker-compose.yml

## or use whatever editor you like, like code, gedit, nano, etc.

And put the following content into the docker-compose.yml file:

services:
  serge:
    image: ghcr.io/serge-chat/serge:latest
    container_name: serge
    restart: unless-stopped
    ports:
      - 8008:8008
    volumes:
      - weights:/usr/src/app/weights
      - datadb:/data/db/

volumes:
  weights:
  datadb:

Usage:

cd serge-chat

docker compose up

To start the App: http://localhost:8008

Jan (JanHQ)

Description:

Jan is an offline ChatGPT alternative packaged as an Electron-based desktop app. Highlights:

- Uses local LLM models (like LLaMA, Mistral, etc.) via a performance-optimized engine

- Built-in model library, easy downloads and updates

- Extensions system for extra plugins (web search, etc.)

- Local OpenAI-like API for integrating with existing apps

Screenshot:

Link:

Installation

We have to build Jan from source and run the app.

git clone https://github.com/janhq/jan.git

cd jan

make build

Usage:

cd jan

cd electron/dist

./jan-linux-x86_64-0.1.1741827769.AppImage --no-sandbox
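Once Jan is running, the local OpenAI-like API listed in the highlights can be tried from the command line. The functions below are a sketch under several assumptions: the API server has been enabled in Jan's settings, it listens on `http://localhost:1337` (believed to be the default, so check your own settings), and the model id is a placeholder. The function names are hypothetical, and the escaping covers only backslashes and double quotes.

```shell
# Escape backslashes and double quotes so text can sit inside a JSON string.
# (Newlines and other control characters are not handled in this sketch.)
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Print an OpenAI-style chat completion request body.
# Usage: chat_request_body MODEL PROMPT
chat_request_body() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# POST the request to an OpenAI-compatible server.
# Usage: chat_once BASE_URL MODEL PROMPT
chat_once() {
  curl -fsS "$1/v1/chat/completions" \
    -H 'Content-Type: application/json' \
    -d "$(chat_request_body "$2" "$3")"
}
```

A call might look like `chat_once http://localhost:1337 your-model-id "Hello"`. Because the request follows the OpenAI chat completions shape, the same function should also work against other OpenAI-compatible servers mentioned in this article by changing the base URL.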

Conclusions

The open-source LLM chat UI ecosystem is dynamic, diverse, and evolving rapidly, presenting a strong alternative to proprietary platforms. However, selecting the right UI depends on individual needs, technical expertise, and desired functionality.

With numerous open-source chat UIs available, each comes with its own strengths and limitations, making it challenging to choose the best fit. Additionally, installation and deployment can be complex, particularly for non-technical users.

Some UIs are built on llama.cpp or Ollama, while others support OpenAI (or OpenAI-compatible APIs). Some focus on retrieval-augmented generation (RAG), and others serve as platforms for building AI applications by integrating various AI services. Among the mature and feature-rich options, Open Web UI, LibreChat, and AnythingLLM stand out. However, Open Web UI primarily supports Ollama and OpenAI-compatible APIs, while LibreChat has no built-in Ollama integration and reaches it only through a manually configured custom endpoint. Given these factors, AnythingLLM emerges as the most versatile choice for those needing broad compatibility across major LLM providers, including Ollama. Meanwhile, LobeChat and Jan show great potential, offering rich feature sets and serving as strong alternatives.
