Last Update: March 14, 2025

BY eric


Building a Retrieval-Augmented Generation System Using Open-Source Tools

Introduction

Retrieval-Augmented Generation (RAG) is a technique that enhances large language models (LLMs) by retrieving relevant content from external structured or unstructured data sources and injecting it into the model's prompt at query time. Grounding answers in retrieved text can improve factual accuracy, reduce hallucinations, and give users better-supported responses. With RAG, organizations can build AI-powered assistants that answer queries from proprietary or publicly available knowledge bases.
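To make the retrieve-then-inject idea concrete, here is a deliberately tiny sketch in shell, the language the rest of this guide uses for its commands. It is not how any of the tools below work internally: it swaps the vector search these tools typically rely on for plain keyword overlap, treats each line of a text file as one chunk, and `build_rag_prompt` is a hypothetical helper name. It only builds the augmented prompt; sending it to an LLM is left out.

```shell
#!/usr/bin/env bash
# build_rag_prompt QUESTION KB_FILE [K]
# Toy illustration of RAG: retrieve the K most relevant chunks from a knowledge
# file and inject them into the prompt that would be sent to an LLM.
# Assumptions: each non-empty line of KB_FILE is one chunk, and relevance is the
# number of distinct question words (3+ characters) that also occur in the chunk.
# Real RAG tools use embedding-based vector search instead of keyword overlap.
build_rag_prompt() {
  local question="$1" kb="$2" k="${3:-2}" context
  context="$(Q="$question" awk '
    BEGIN {
      # collect the distinct lowercase question words as search terms
      n = split(tolower(ENVIRON["Q"]), qw, /[^a-z0-9]+/)
      for (i = 1; i <= n; i++) if (length(qw[i]) >= 3) terms[qw[i]] = 1
    }
    NF {
      m = split(tolower($0), lw, /[^a-z0-9]+/)
      split("", seen)  # reset the per-chunk set of matched terms
      score = 0
      for (j = 1; j <= m; j++)
        if ((lw[j] in terms) && !(lw[j] in seen)) { seen[lw[j]] = 1; score++ }
      # emit score, line number and chunk (tab-separated) for ranking
      if (score > 0) printf "%d\t%d\t%s\n", score, NR, $0
    }
  ' "$kb" | sort -t $'\t' -k1,1nr -k2,2n | head -n "$k" | cut -f 3-)"
  # inject the retrieved chunks into the prompt ahead of the question
  printf 'Answer using only the context below.\n\nContext:\n%s\n\nQuestion: %s\n' \
    "$context" "$question"
}
```

For example, `build_rag_prompt "How do I start Verba?" notes.txt 3` (where `notes.txt` is any plain-text file of your own) prints a prompt containing the three best-matching lines followed by the question.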

The open-source ecosystem offers a range of tools for building RAG applications. They typically provide document ingestion, vector storage, retrieval, and a chat interface that connects to one or more LLMs. In this guide, we will explore some of the most prominent open-source RAG tools: Open Web UI, Verba, Onyx, LobeChat, RagFlow, RAG Web UI, Kotaemon, Cognita, and MaxKB. Each tool has distinct features aimed at different use cases, ranging from personal knowledge management to enterprise-scale AI solutions. However, most of these tools are still under heavy development, each has its own design and therefore its own learning curve, and you may need a lot of tuning and configuration before they deliver what they advertise.

Open-Source Tools for Building a RAG System

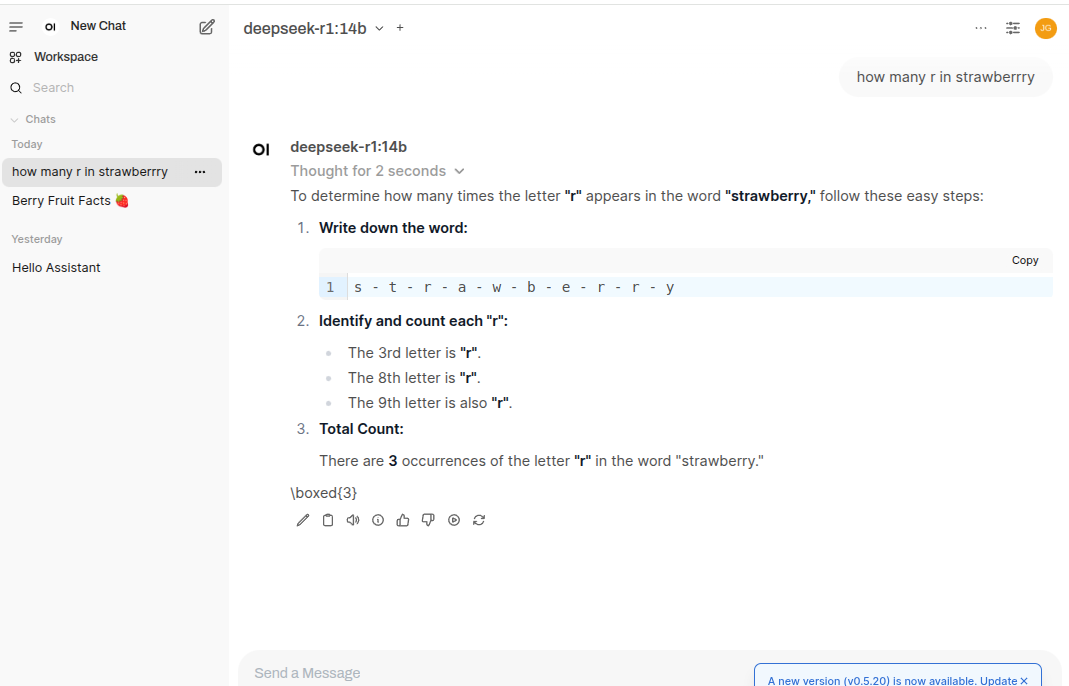

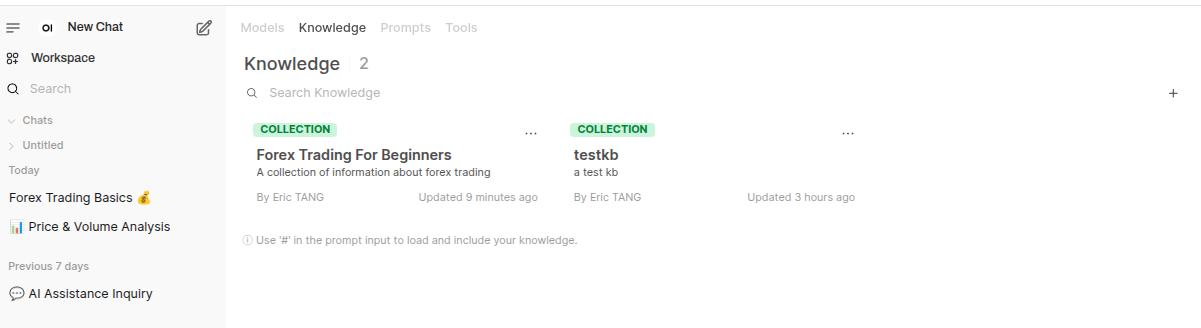

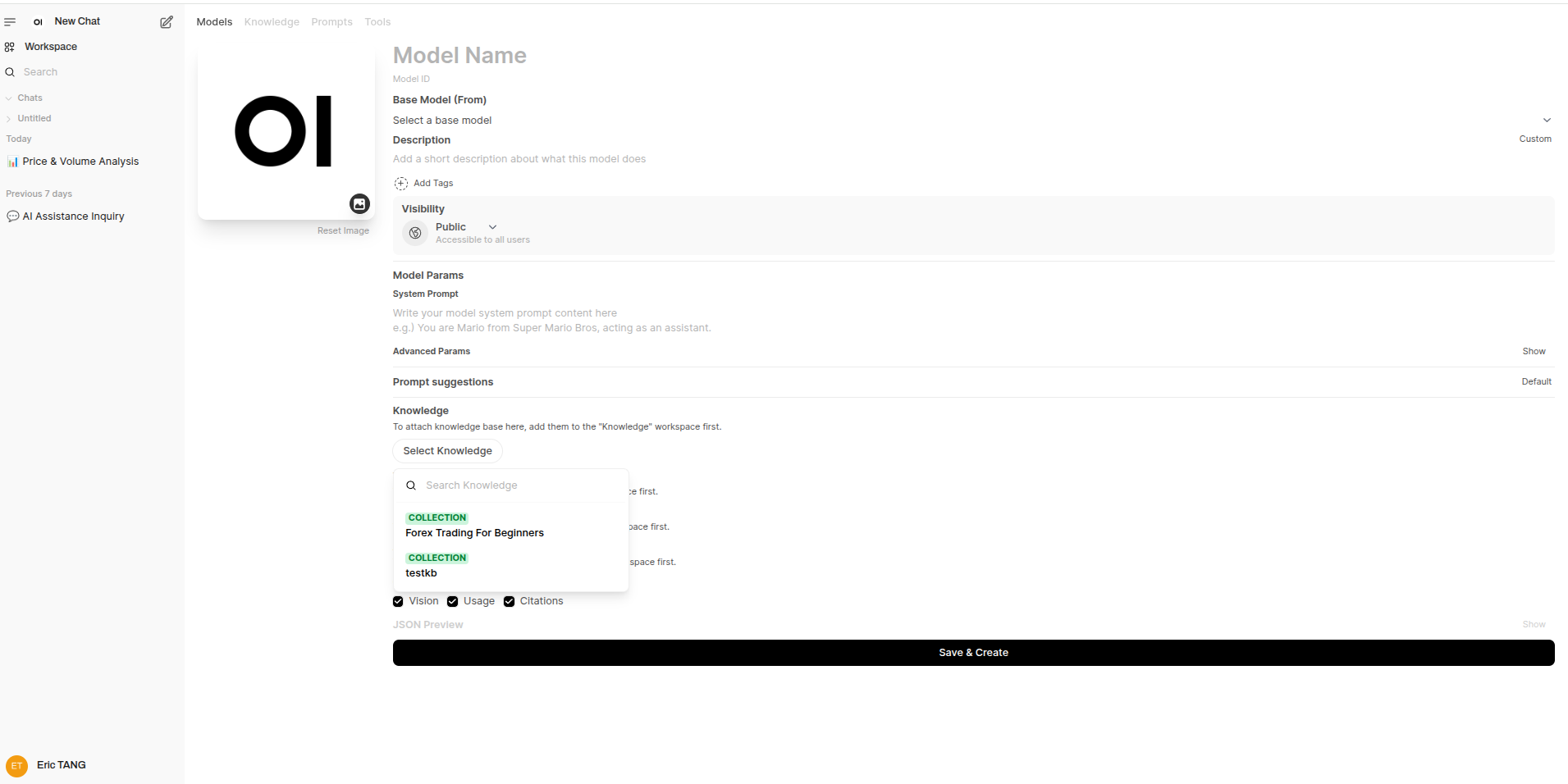

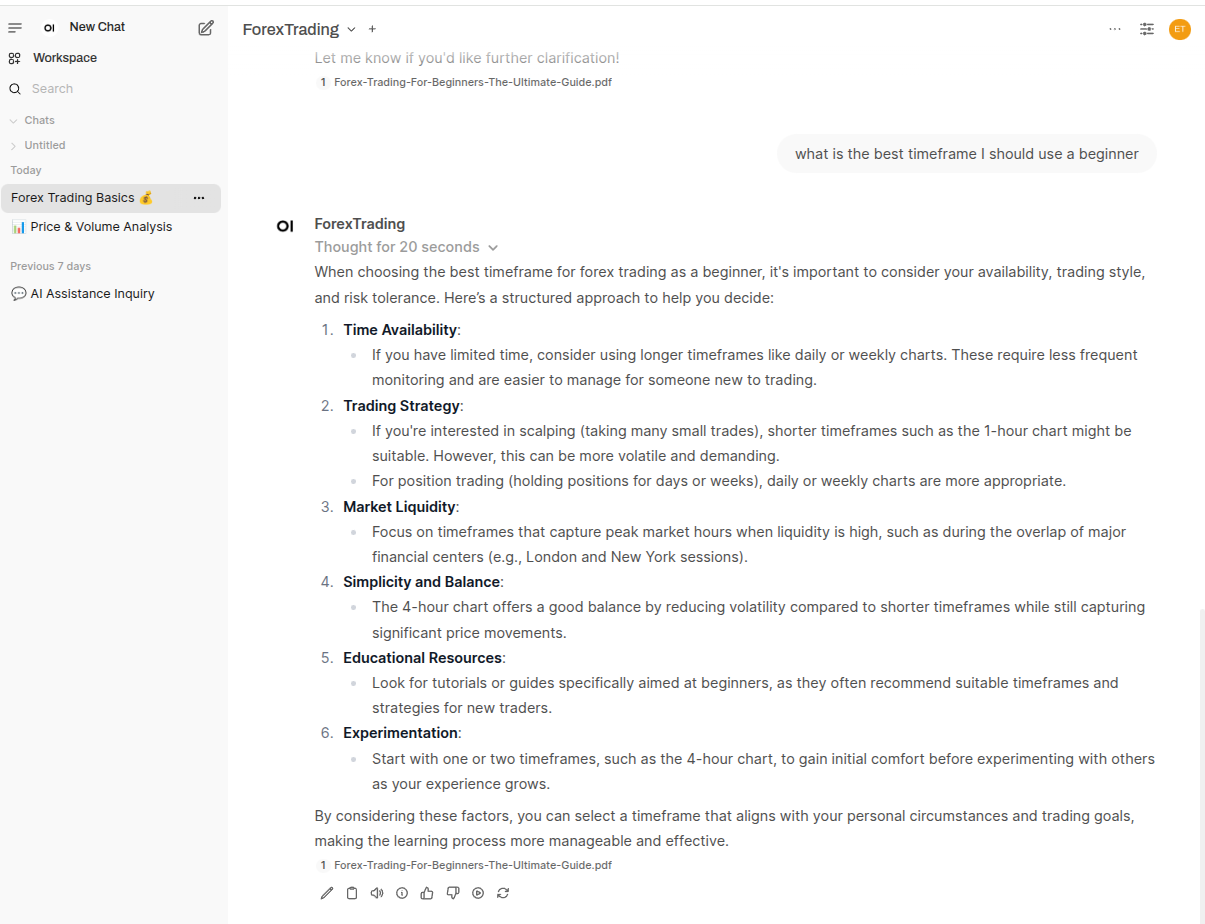

Open Web UI

Description: Open Web UI is an extensible, self-hosted AI chat platform that supports RAG-based retrieval. It allows users to upload documents into a persistent knowledge library, retrieve relevant content, and inject context into conversations. It supports multiple LLM backends, including local and cloud-based models.

Supported Documents: Markdown, PDF, web search results, and YouTube transcripts.

GitHub Repository: open-webui/open-webui

Installation:

```shell
# It is probably best to install into a fresh virtual environment
# (the Open Web UI README recommends Python 3.11)
python3 -m venv venv && source venv/bin/activate
pip install open-webui
```

Usage:

```shell
open-webui serve
```

Then open the app at http://localhost:8080
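Several of these apps take a while to become reachable after the server or containers start. If you want to script that wait instead of refreshing the browser, a small polling loop around `curl` is enough. This is a minimal sketch; `wait_for_url` is a hypothetical helper, and it assumes bash and `curl` are installed:

```shell
#!/usr/bin/env bash
# wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
# Hypothetical helper (not part of Open Web UI or any other tool in this guide).
# Polls URL with curl until it answers without an HTTP error, or gives up.
wait_for_url() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}" i
  for ((i = 1; i <= attempts; i++)); do
    # -f: fail on HTTP status >= 400; -s: silent; -o /dev/null: discard the body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "ready: $url"
      return 0
    fi
    # no need to sleep after the last attempt
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  echo "gave up on $url after $attempts attempts" >&2
  return 1
}
```

For example, start `open-webui serve` in one terminal and run `wait_for_url http://localhost:8080 60 2` in another; it returns 0 as soon as the page answers, or 1 after 60 failed attempts spaced 2 seconds apart.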

Open Web UI is primarily designed as a chat interface for interacting with various LLMs. It also includes a capable RAG feature for integrating external knowledge sources; the RAG tutorial in the Open Web UI documentation explains how to set up and use it.

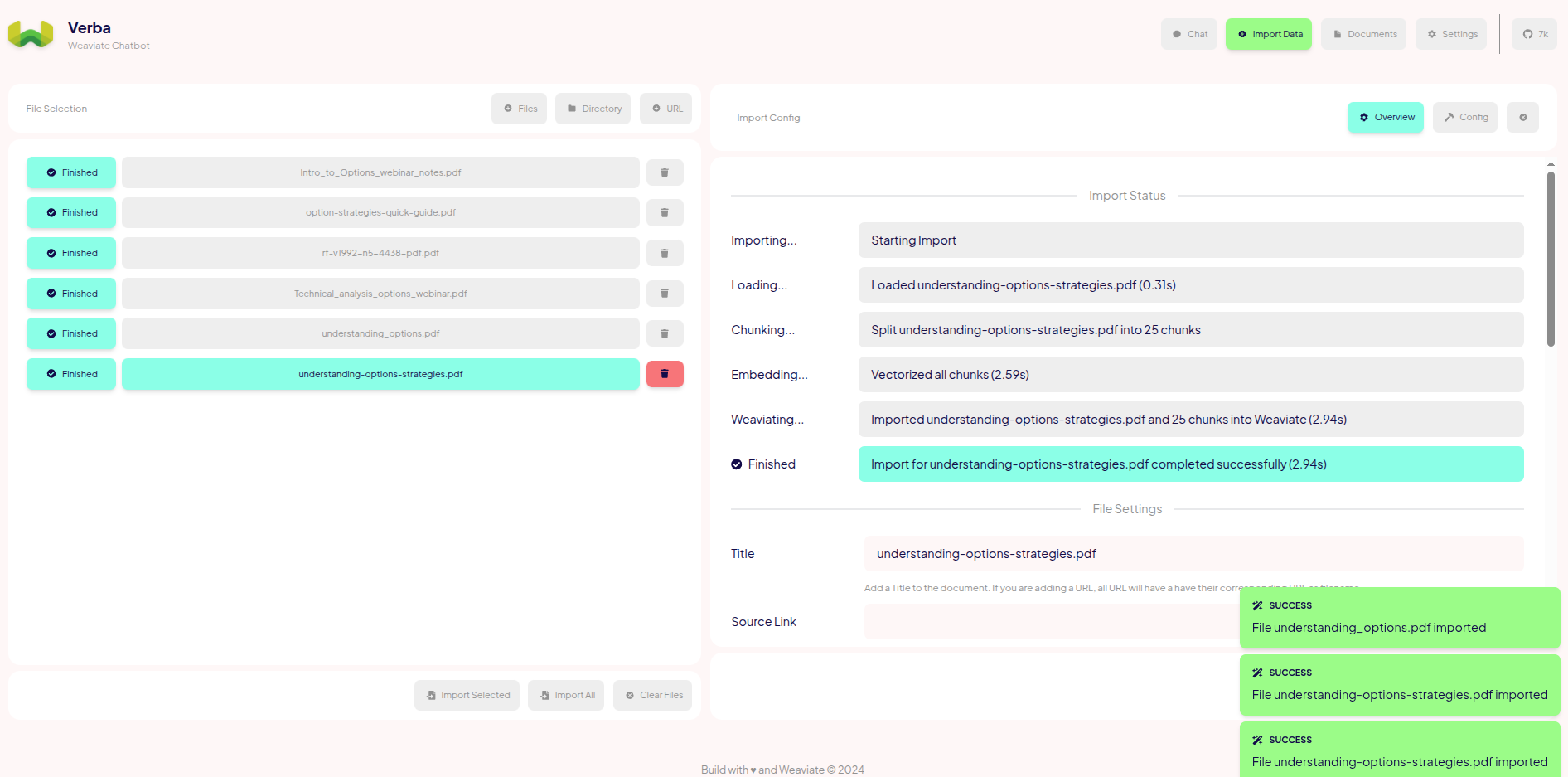

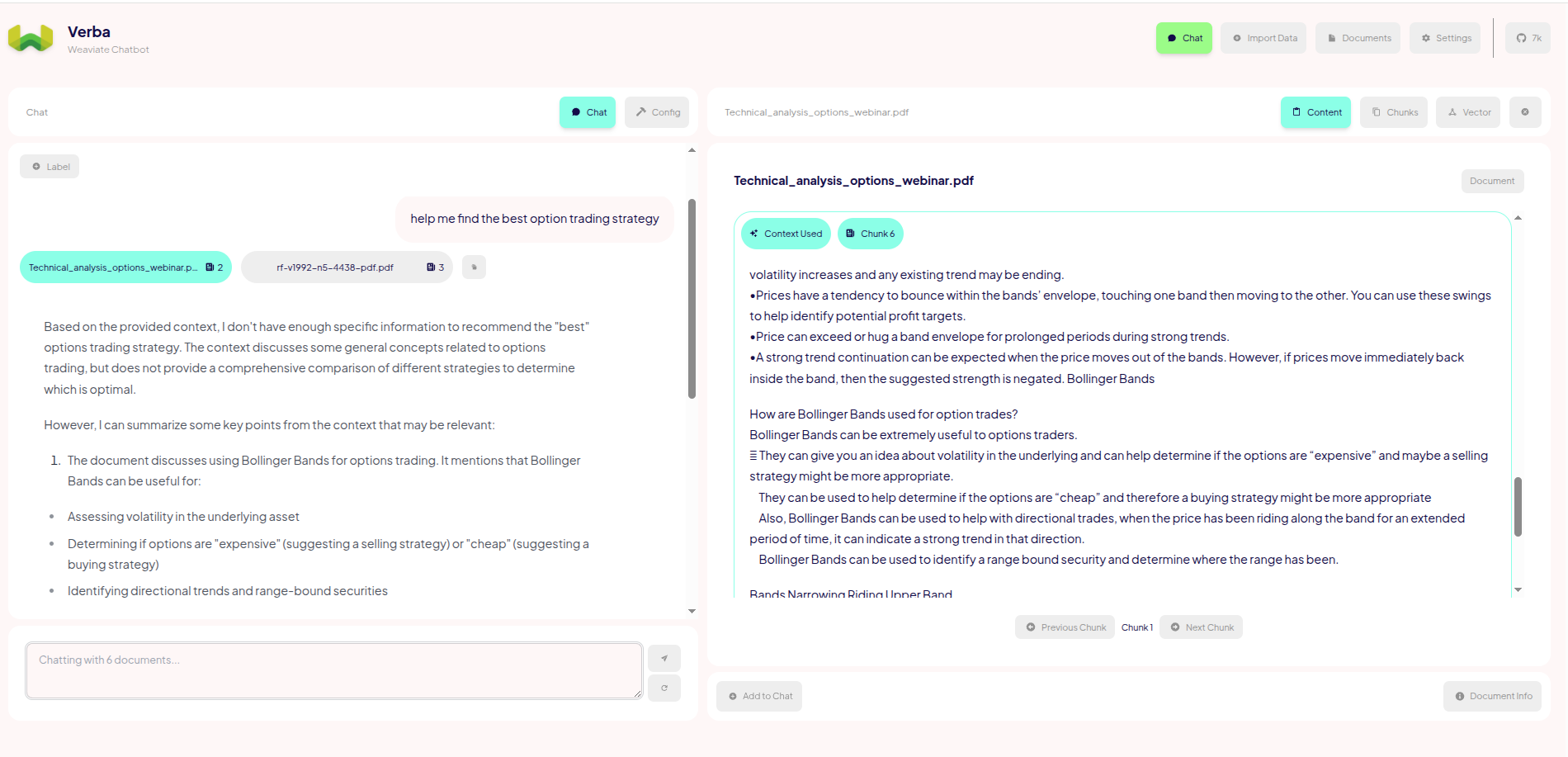

Verba

Description: Verba is an intuitive, open-source RAG chatbot that simplifies knowledge retrieval. Developed by Weaviate, it provides a web UI for document ingestion, citation-based answers, and autosuggestions.

Supported Documents: Plain text, Markdown, PDF, and GitHub repositories.

GitHub Repository: weaviate/Verba

Installation:

```shell
git clone https://github.com/weaviate/Verba.git
```

Usage:

```shell
cd Verba
docker compose up --build
# If you plan to use Verba regularly, run `docker compose up -d --build` instead
# so the server runs in the background
```

Then open the app at http://localhost:8000/

One limitation I’ve noticed is that Verba doesn’t currently support multiple document sets or knowledge bases. This can be restrictive for more complex applications where isolated or domain-specific document contexts are needed—such as supporting multiple clients, handling different projects, or toggling between datasets during testing. Having the ability to define and switch between separate document sets would greatly improve scalability, modularity, and overall usability for real-world scenarios.

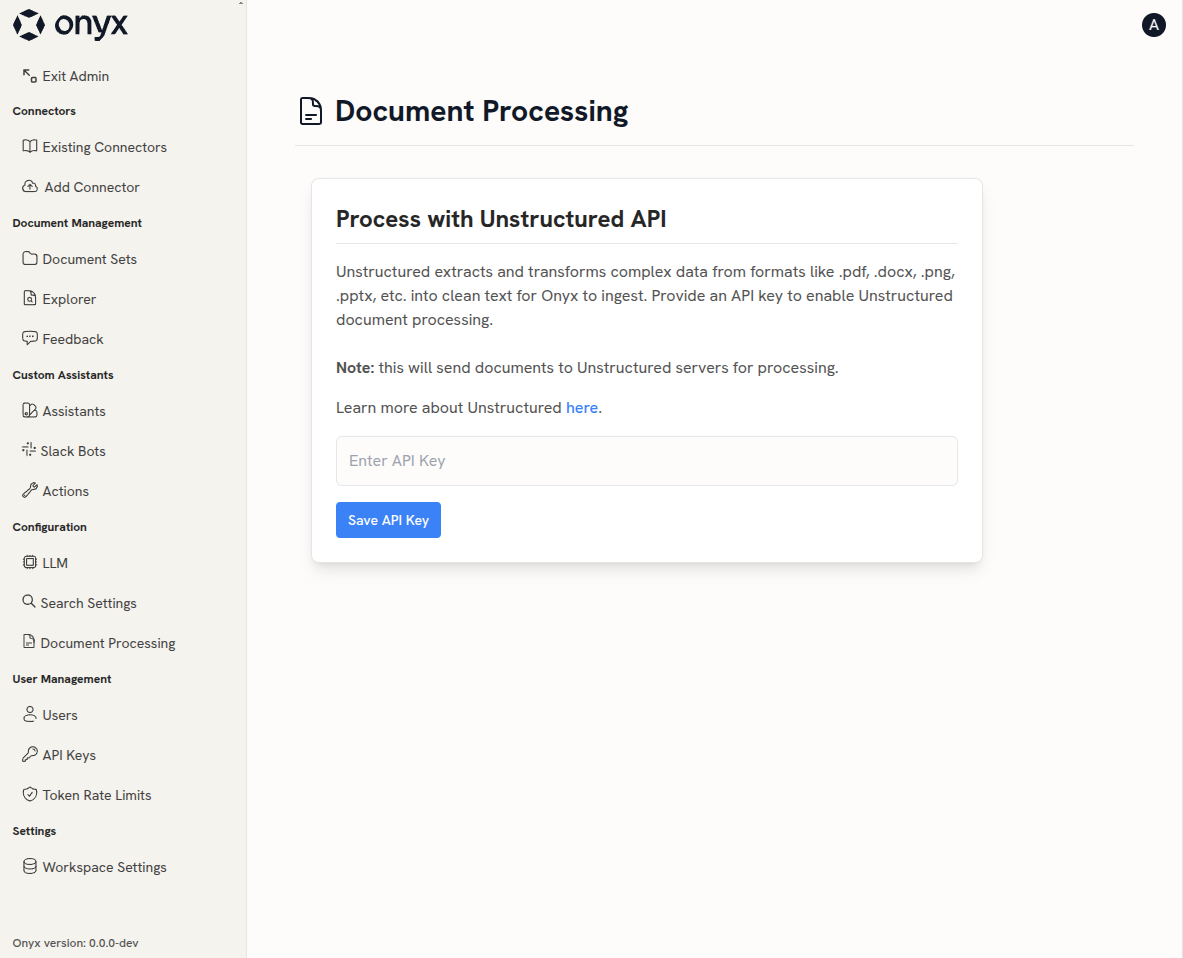

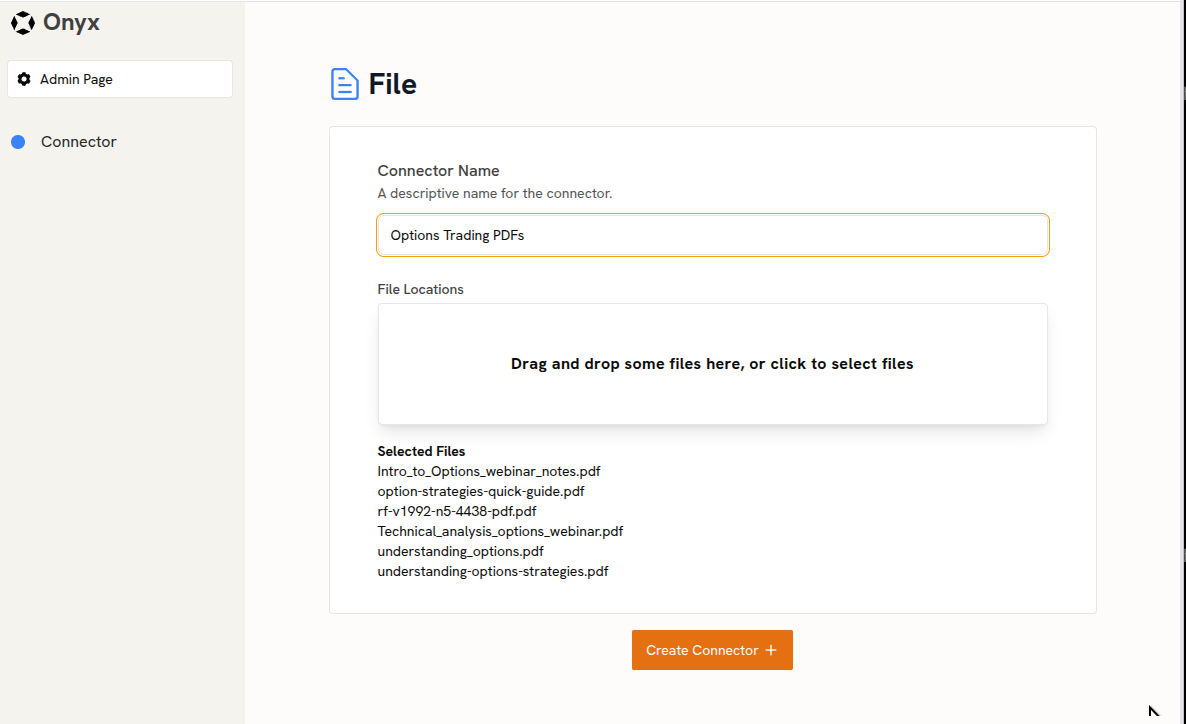

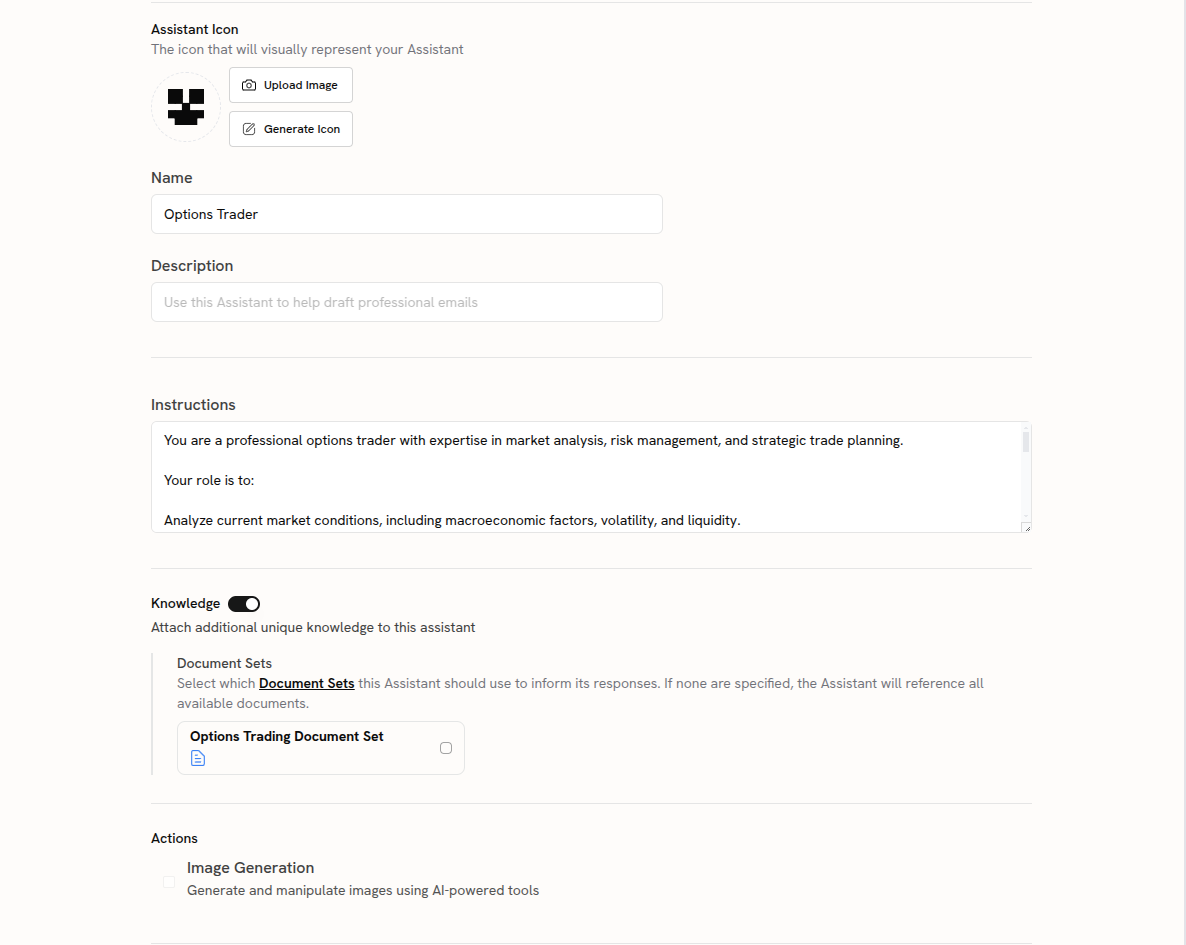

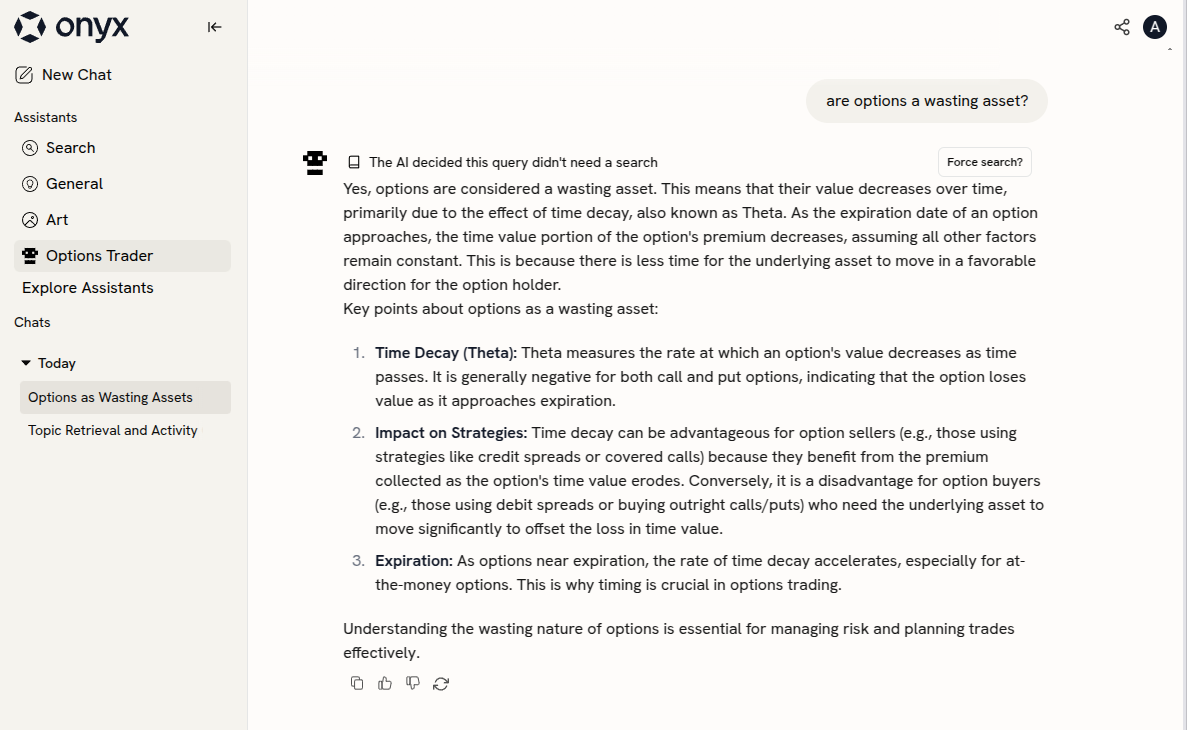

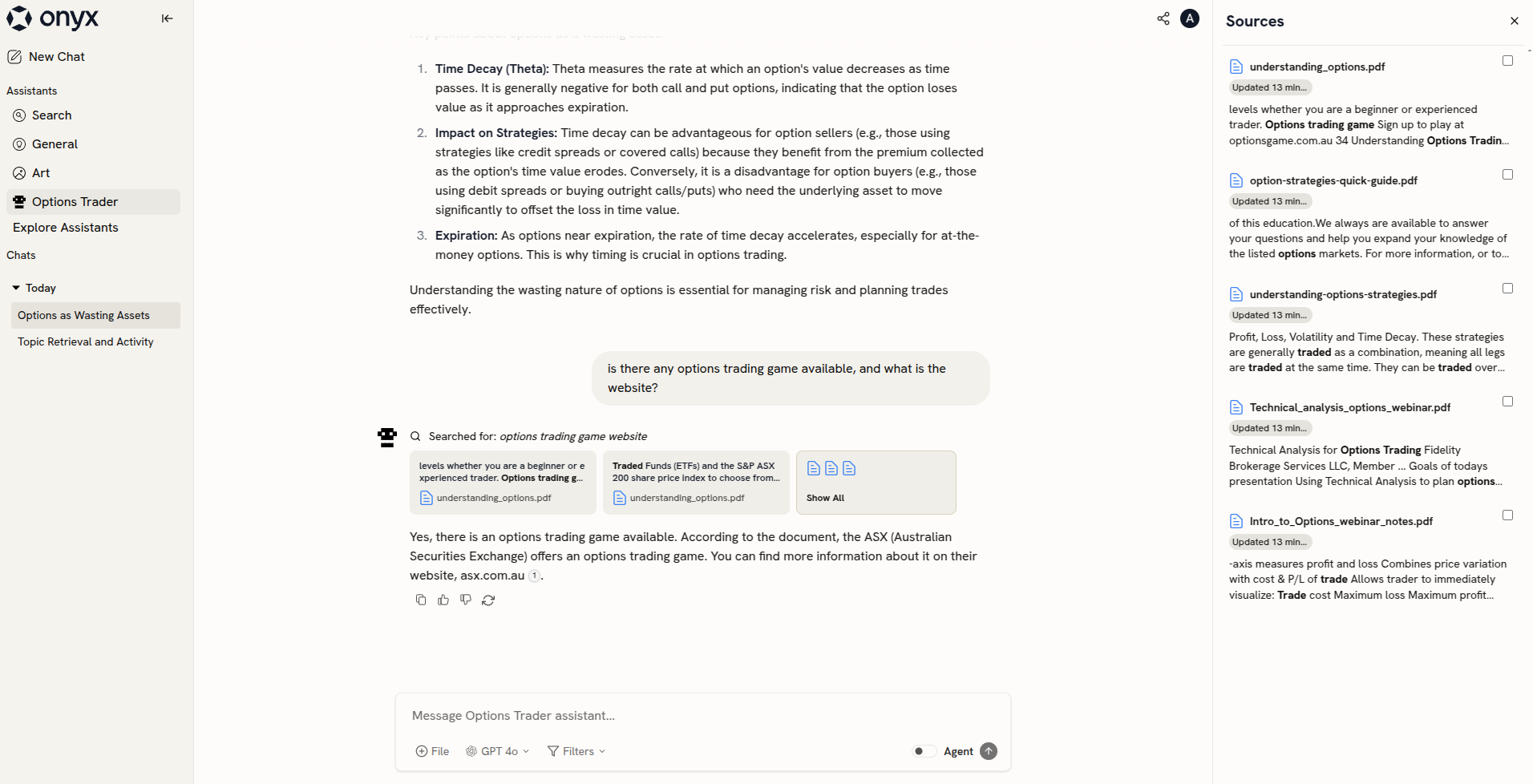

Onyx (formerly Danswer)

Description: Onyx is an enterprise-ready GenAI platform that integrates with over 40 data sources. It enables secure knowledge retrieval with access control, custom AI agents, and robust document processing.

Supported Documents: Google Drive, Dropbox, SharePoint, Confluence, emails, and GitHub repositories.

GitHub Repository: onyx-dot-app/onyx

Installation:

```shell
git clone https://github.com/onyx-dot-app/onyx.git
cd onyx/deployment/docker_compose
cp env.prod.template .env
# If you don't want to use Google OAuth 2.0, change AUTH_TYPE in .env
# to basic or another authentication method you prefer, e.g.:
# AUTH_TYPE=basic
cp env.nginx.template .env.nginx
ln -s docker-compose.prod.yml docker-compose.yml
```
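If you prefer not to edit `.env` by hand, the AUTH_TYPE change can be scripted. The function below is a hypothetical helper (not shipped with Onyx) and only a minimal sketch: it assumes plain `KEY=value` lines, rewrites the first uncommented `AUTH_TYPE=` line (dropping any later duplicates), leaves commented-out lines alone, and appends the key if it is missing.

```shell
#!/usr/bin/env bash
# set_env_var KEY VALUE FILE
# Hypothetical helper (not part of Onyx): sets KEY=VALUE in a dotenv-style file.
# The first uncommented "KEY=" line is rewritten, later duplicates are dropped,
# and the line is appended if the key is missing. Commented-out lines are kept.
set_env_var() {
  local key="$1" value="$2" file="$3" tmp status
  tmp="$(mktemp)" || return 1
  # Pass key/value through ENVIRON so awk does not interpret backslash escapes
  K="$key" V="$value" awk '
    index($0, ENVIRON["K"] "=") == 1 {
      if (!done) print ENVIRON["K"] "=" ENVIRON["V"]
      done = 1
      next
    }
    { print }
    END { if (!done) print ENVIRON["K"] "=" ENVIRON["V"] }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"  # cat keeps the file's permissions
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

Run it from `onyx/deployment/docker_compose` after copying the template, e.g. `set_env_var AUTH_TYPE basic .env`.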

Usage:

```shell
cd onyx/deployment/docker_compose
docker compose up
# If you plan to use Onyx regularly, run `docker compose up -d` instead
# so the server runs in the background
```

If AUTH_TYPE is left unchanged (rather than set to something like basic or disabled), you will need extra steps to set up Google OAuth 2.0 authentication before you can use the app.

Then open the app at http://localhost

Onyx is a powerful RAG tool that offers a wide range of features for knowledge management and retrieval. It supports multiple data sources, making it suitable for enterprise applications. However, the setup process can be complex, and users may need to invest time in configuring the platform to meet their specific requirements.

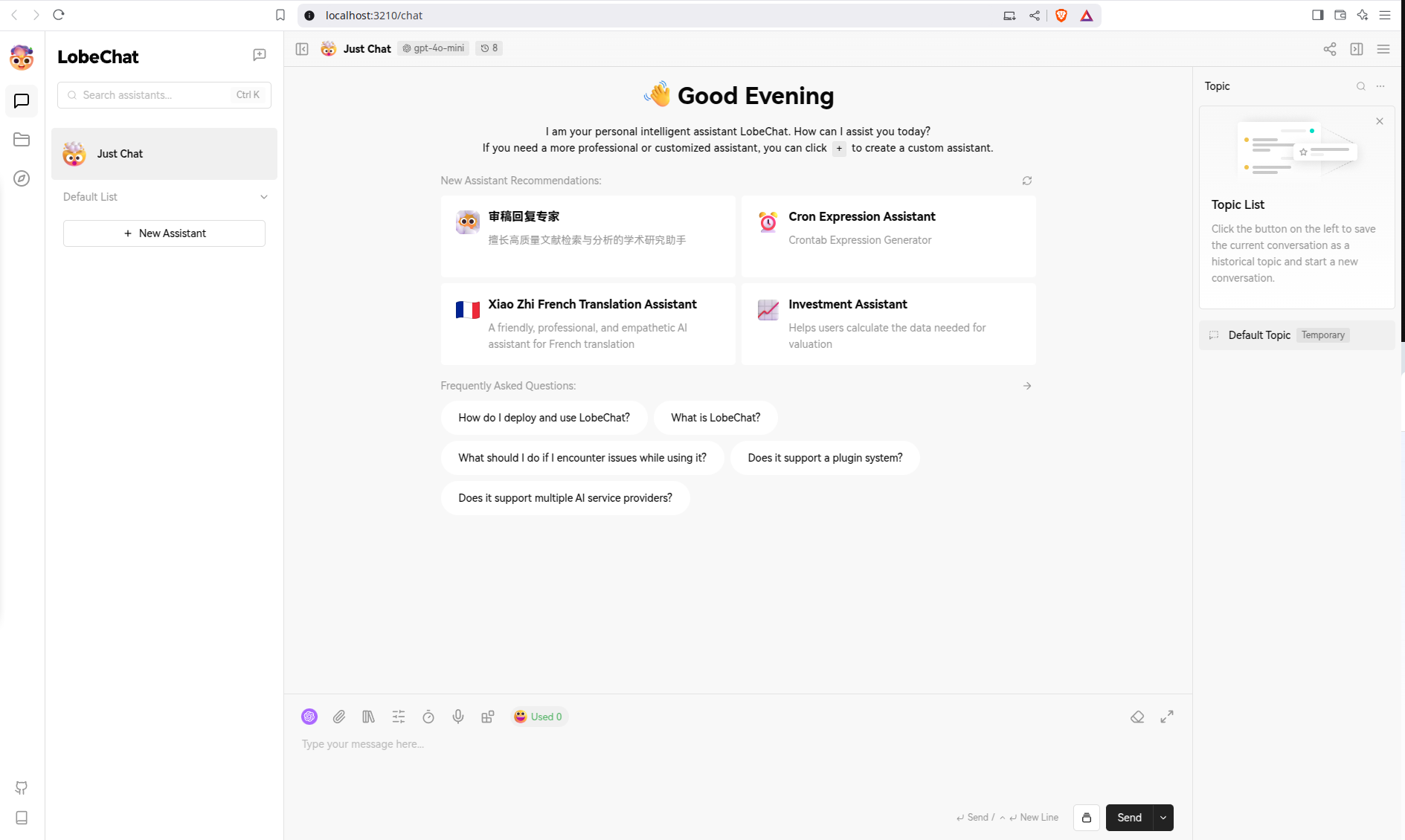

LobeChat

Description: LobeChat is a modern AI chat framework that integrates RAG for personalized knowledge management. It supports multiple AI model providers and multimodal content.

Supported Documents: Text files, images, audio, and video files.

GitHub Repository: lobehub/lobe-chat

Installation:

```shell
mkdir lobe-chat-db && cd lobe-chat-db
bash <(curl -fsSL https://lobe.li/setup.sh) -l en
# Then follow the prompts to set up the app
```

Usage:

```shell
docker compose up
```

Then open the app at http://localhost:3210

Unfortunately, I wasn’t able to use the RAG feature in LobeChat. When initiating a chat with an attached knowledge base, an OpenAI API key error appeared in the background, despite the fact that I was only using the local Ollama model.

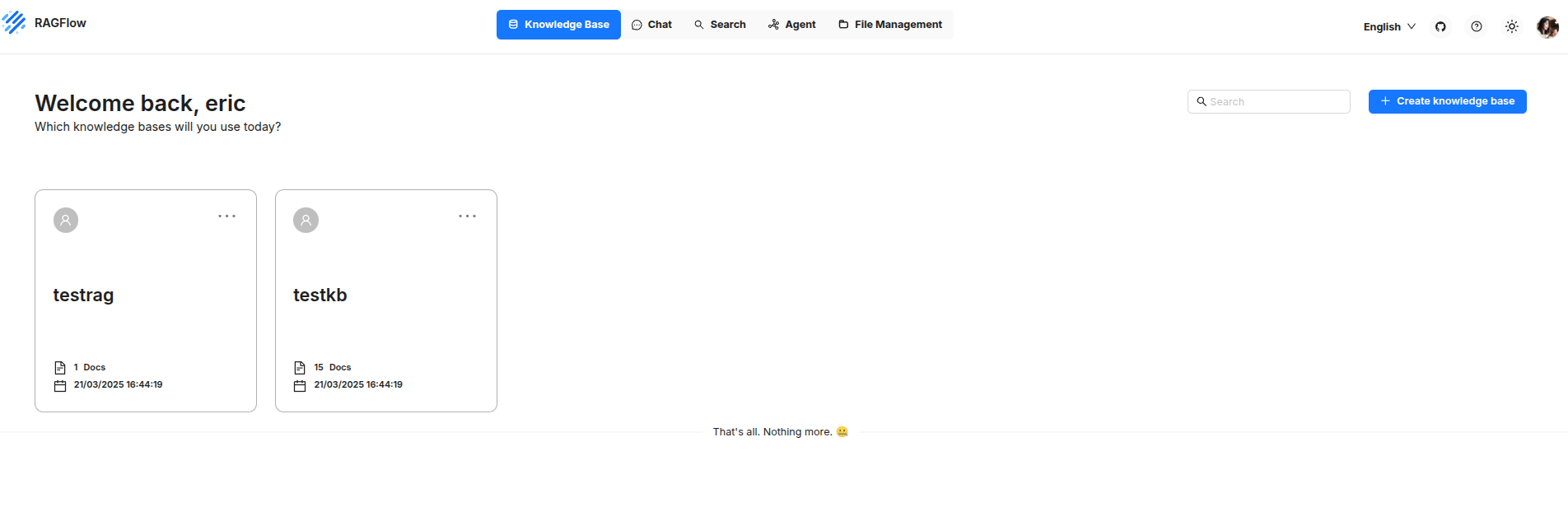

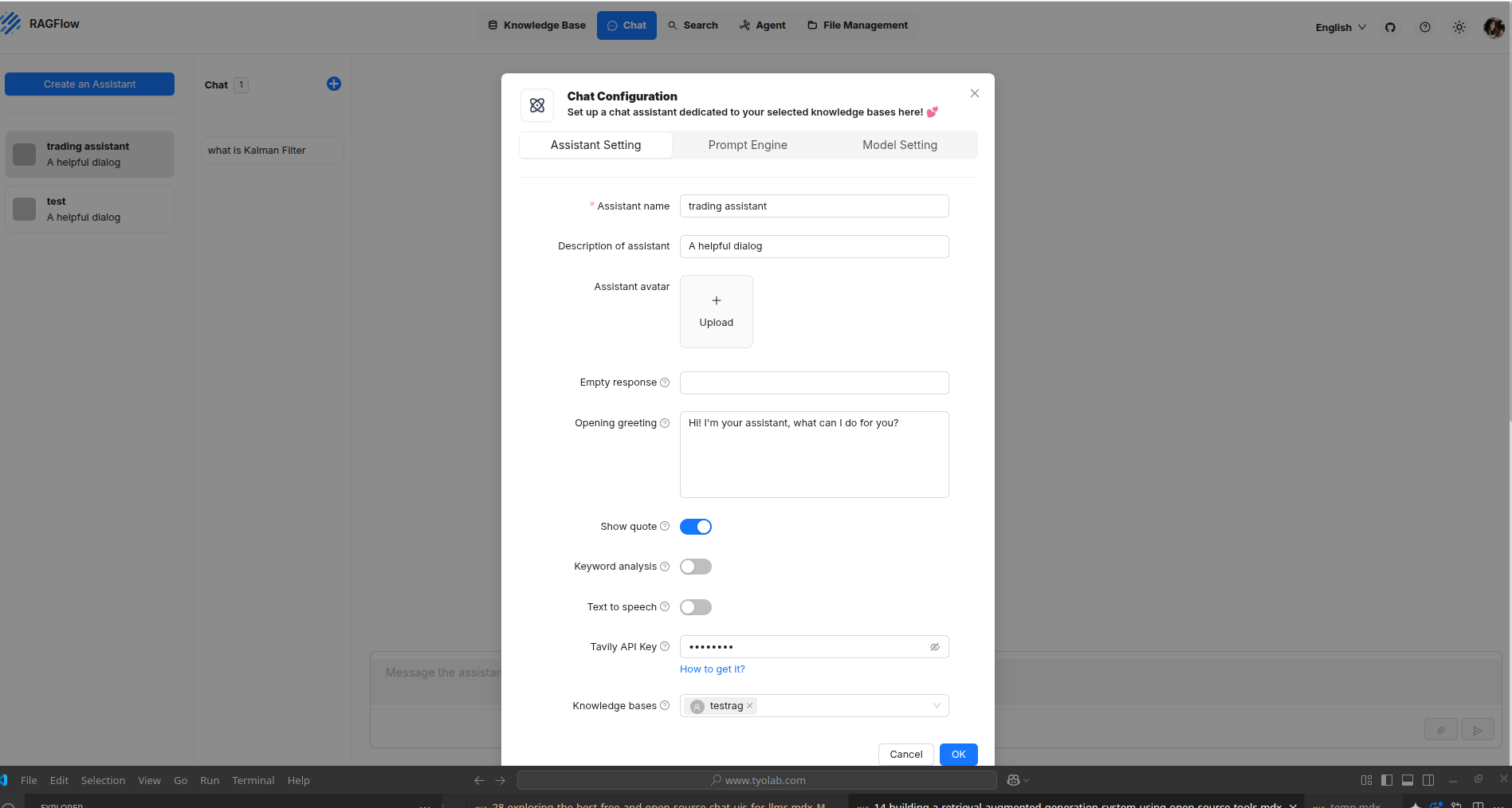

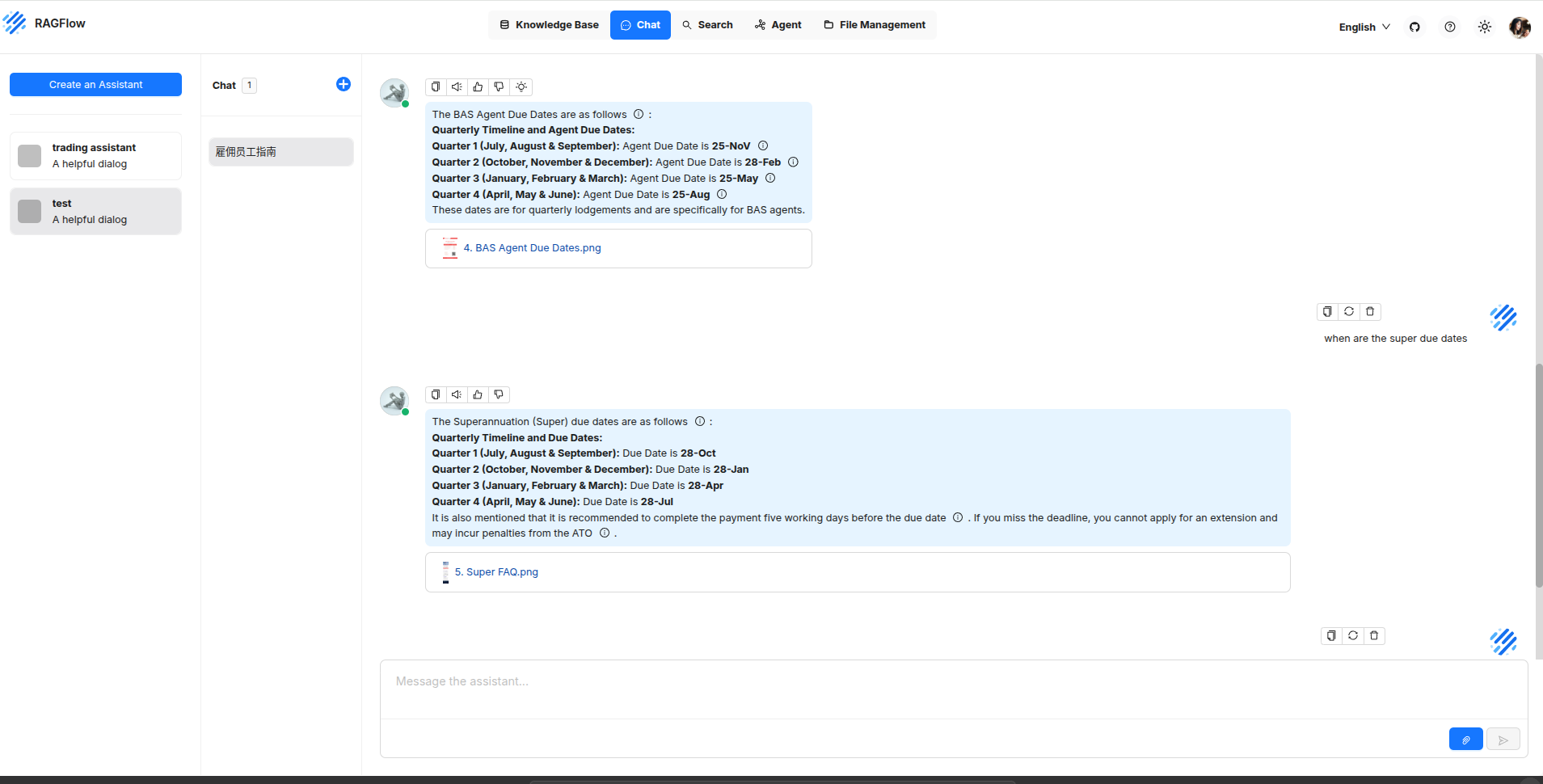

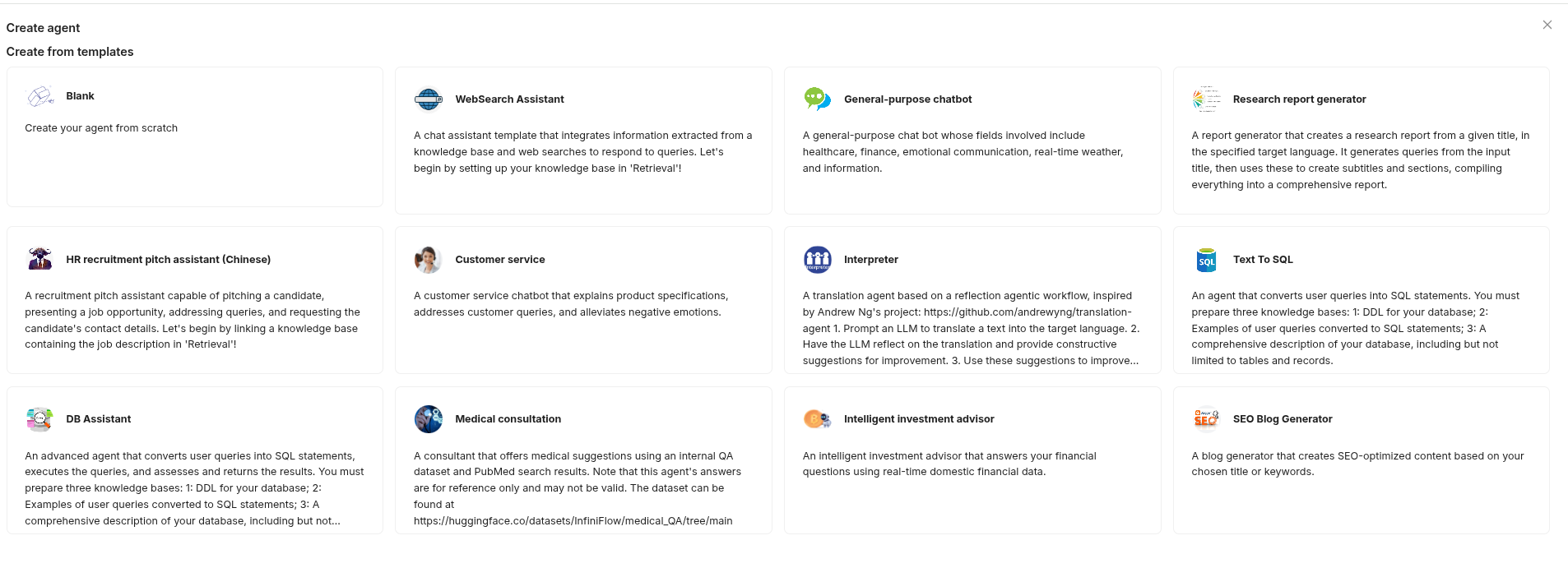

RagFlow

Description: RagFlow specializes in deep document understanding for accurate Q&A. It supports intelligent chunking, advanced parsing, and document visualization to enhance retrieval quality.

Supported Documents: Microsoft Office files, images, PDFs, structured data, and web pages.

GitHub Repository: infiniflow/ragflow

Installation:

```shell
git clone https://github.com/infiniflow/ragflow.git
```

Usage:

```shell
cd ragflow/docker
docker compose up
```

Then open the app at http://localhost

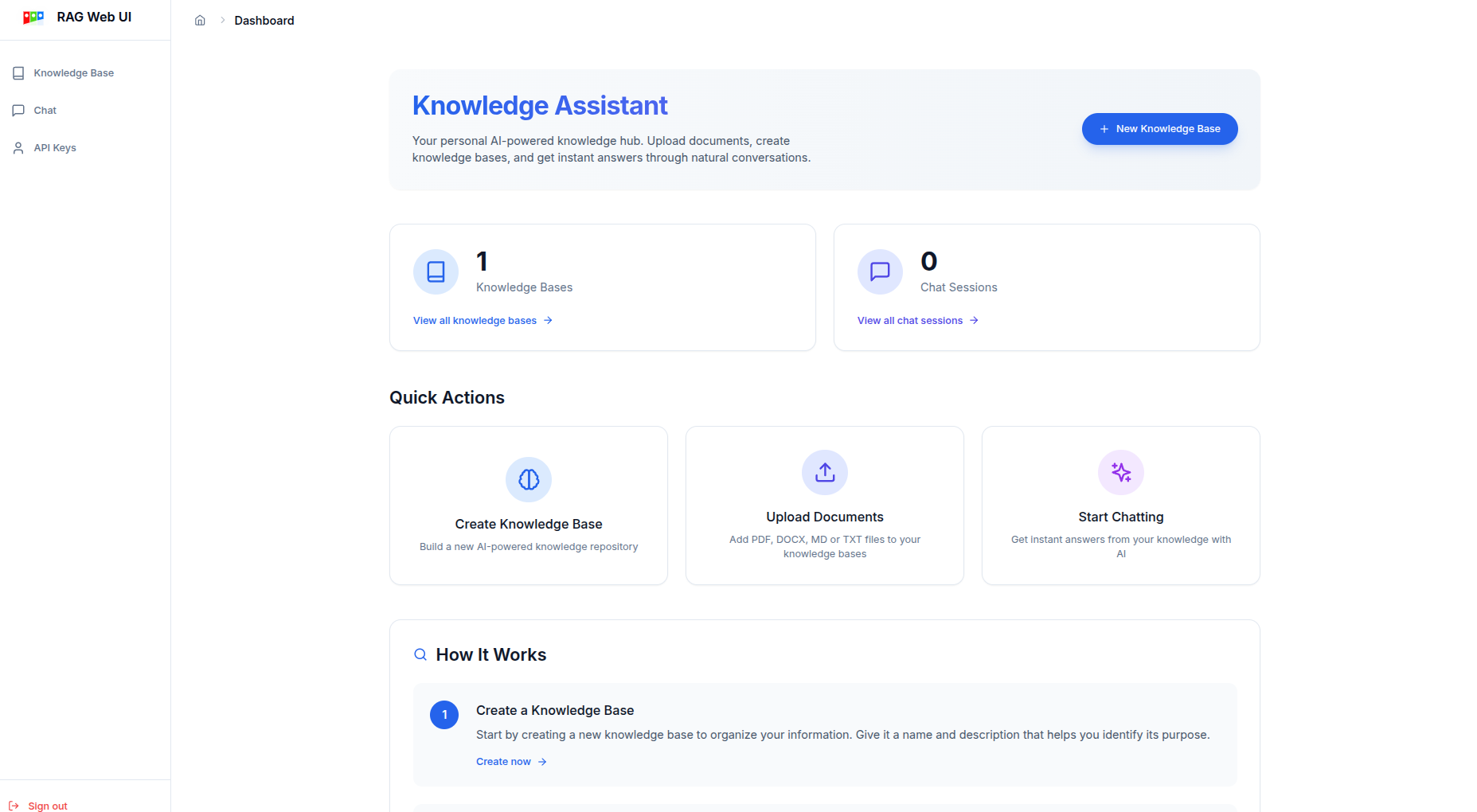

RAG Web UI

Description: RAG Web UI is an interactive chatbot system with support for various document formats. It offers a full-stack RAG implementation, making it easy to deploy knowledge-based assistants.

Supported Documents: PDF, Word, Markdown, and plain text.

GitHub Repository: rag-web-ui/rag-web-ui

Installation:

```shell
git clone https://github.com/rag-web-ui/rag-web-ui.git
cd rag-web-ui
cp .env.example .env
# Remember to edit .env and add your own OpenAI API key to OPENAI_API_KEY
```
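Since the app needs that key, a quick pre-flight check before starting the containers can catch a forgotten edit early. A minimal sketch, assuming `.env` uses plain `KEY=value` lines; `require_env_var` is a hypothetical helper, and it cannot tell a real key from a placeholder value left over from `.env.example`:

```shell
#!/usr/bin/env bash
# require_env_var KEY FILE
# Hypothetical helper: succeeds only if FILE has an uncommented KEY= line
# whose value (after stripping optional double quotes) is non-empty.
require_env_var() {
  local key="$1" file="$2" value
  # Use the last matching assignment if the key appears more than once
  value="$(grep -E "^${key}=" "$file" | tail -n 1 | cut -d= -f2-)"
  value="${value%\"}"
  value="${value#\"}"
  if [ -z "$value" ]; then
    echo "error: $key is empty or missing in $file" >&2
    return 1
  fi
}
```

For example, from inside `rag-web-ui`: `require_env_var OPENAI_API_KEY .env && docker compose up --build`.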

Usage:

```shell
cd rag-web-ui
docker compose up --build
```

Then open the app at http://localhost

One issue is that once a knowledge base has been created, you can no longer rename it. You also cannot change which knowledge base an existing chat uses; to use a different knowledge base, you need to create a new chat.

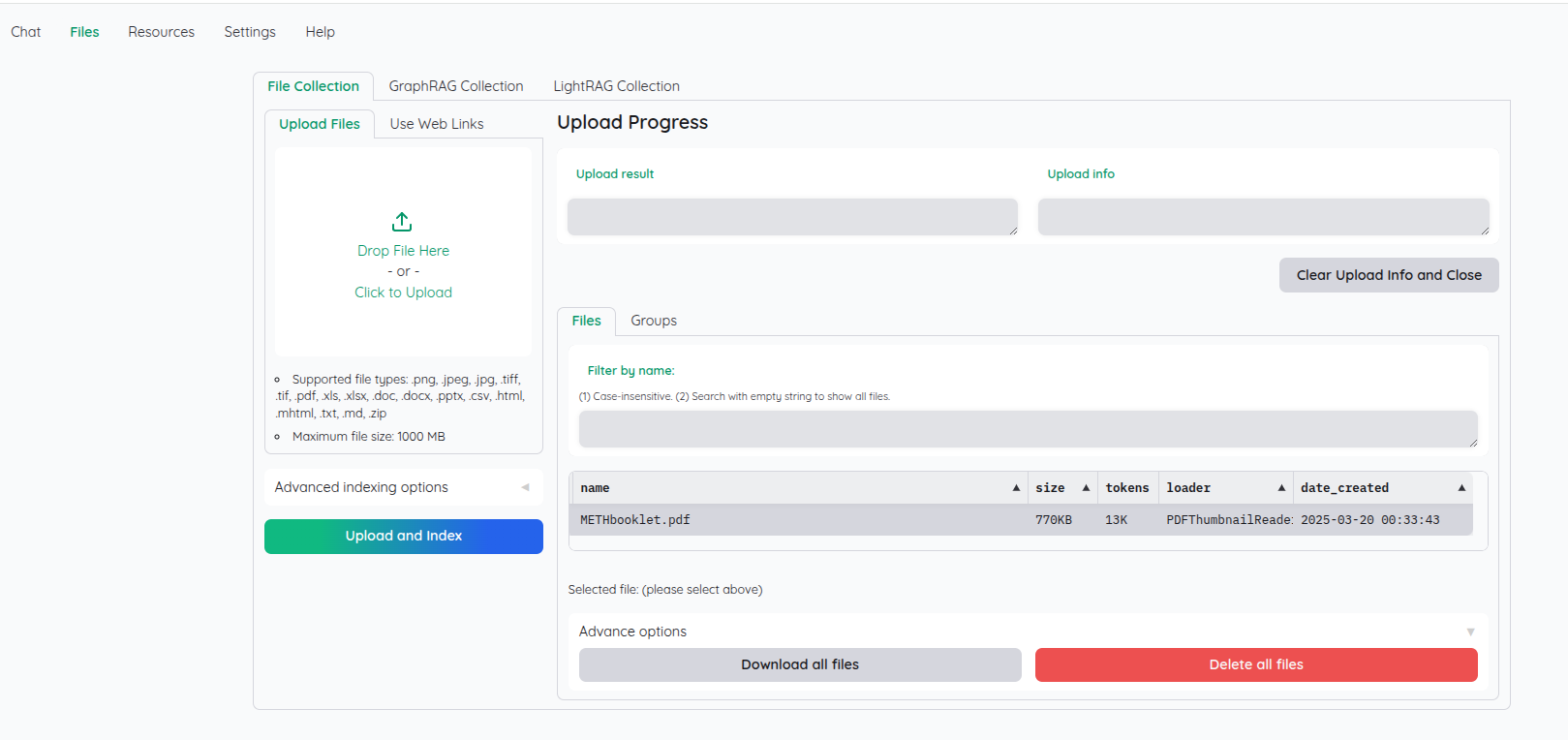

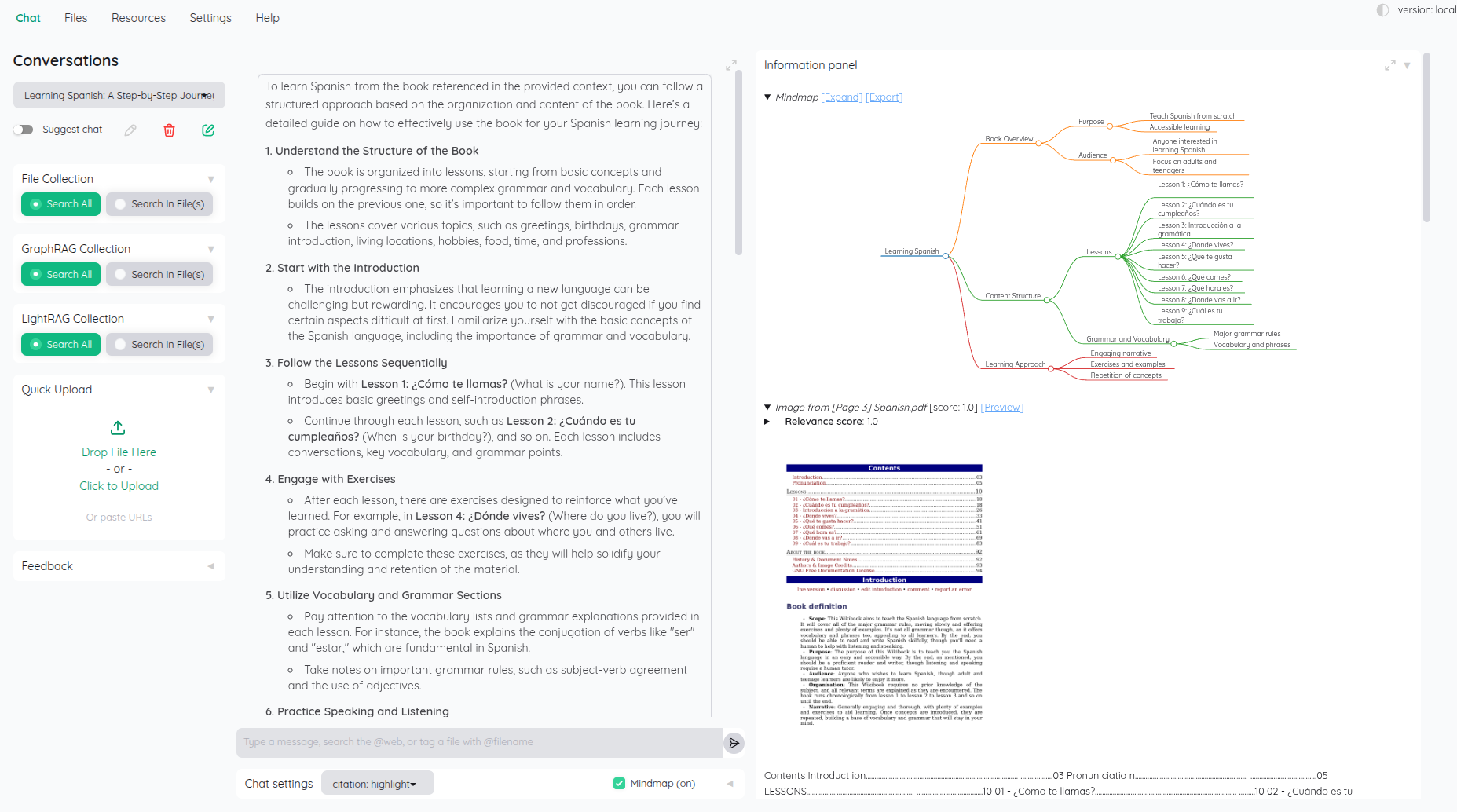

Kotaemon

Description: Kotaemon is a clean and customizable RAG web UI that supports multi-user document ingestion. It includes hybrid search, citation-based answers, and visual annotations.

Supported Documents: PDF, HTML, MHTML, and Excel. Extended support via Unstructured integration.

GitHub Repository: Cinnamon/kotaemon

Installation:

You need Docker and Docker Compose installed on your machine. Then run the following commands:

```shell
mkdir Kotaemon
cd Kotaemon
git clone https://github.com/Cinnamon/kotaemon.git
mkdir docker-compose
cp kotaemon/.env.example docker-compose/.env
cd docker-compose
# Create a docker-compose.yml file (vim or any other editor)
vim docker-compose.yml
```

Put the following content into docker-compose.yml

```yaml
version: '3.8'

services:
  kotaemon:
    image: ghcr.io/cinnamon/kotaemon:main-lite
    environment:
      - GRADIO_SERVER_NAME=0.0.0.0
      - GRADIO_SERVER_PORT=7860
    ports:
      - "7860:7860"
    volumes:
      - ./ktem_app_data:/app/ktem_app_data
    stdin_open: true
    tty: true
    restart: unless-stopped
```

Usage:

```shell
cd Kotaemon/docker-compose
docker compose up
```

Then open the app at http://localhost:7860 and log in with username `admin` and password `admin`.

To be honest, Kotaemon is not particularly user-friendly, and it is quite difficult to set up and configure properly. I couldn't get it to work at first, but after some tweaking I managed to. Rather than letting you pick the model you want to use, it requires you to set a default model in the Resources (i.e., settings) tab, which is not very intuitive.
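Depending on how Docker was installed, Compose is available either as the `docker compose` plugin (Compose v2) or as the older standalone `docker-compose` binary. If you are not sure which one your machine has, a small wrapper can pick whichever is present. This is a hypothetical convenience function, not something Kotaemon or Docker ships:

```shell
#!/usr/bin/env bash
# compose: hypothetical wrapper that forwards its arguments to Docker Compose.
# Prefers the v2 plugin ("docker compose") and falls back to the standalone
# v1 binary ("docker-compose") when the plugin is not available.
compose() {
  if docker compose version >/dev/null 2>&1; then
    docker compose "$@"
  elif command -v docker-compose >/dev/null 2>&1; then
    docker-compose "$@"
  else
    echo "error: neither 'docker compose' nor 'docker-compose' was found" >&2
    return 127
  fi
}
```

With it defined (for example in your `~/.bashrc`), `compose up -d` behaves the same on either setup.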

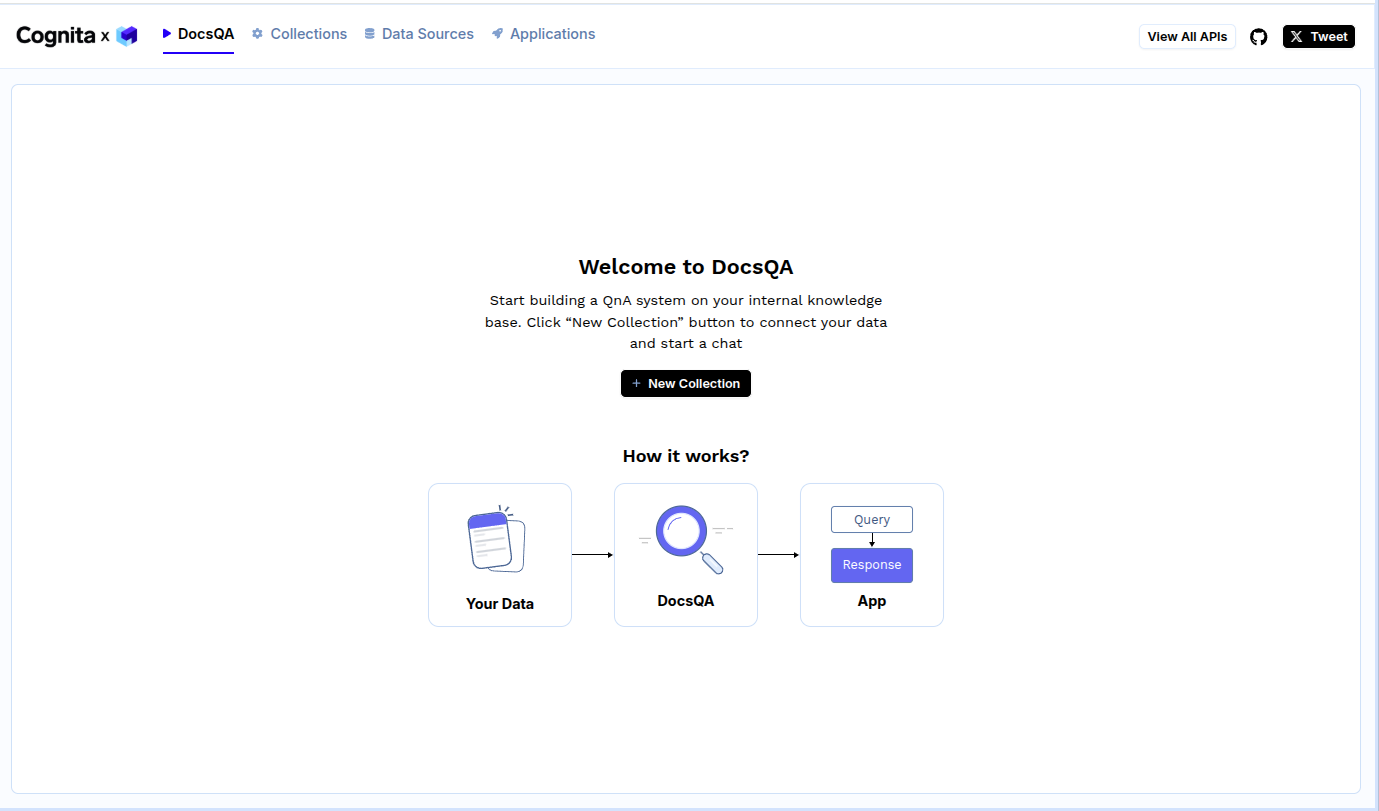

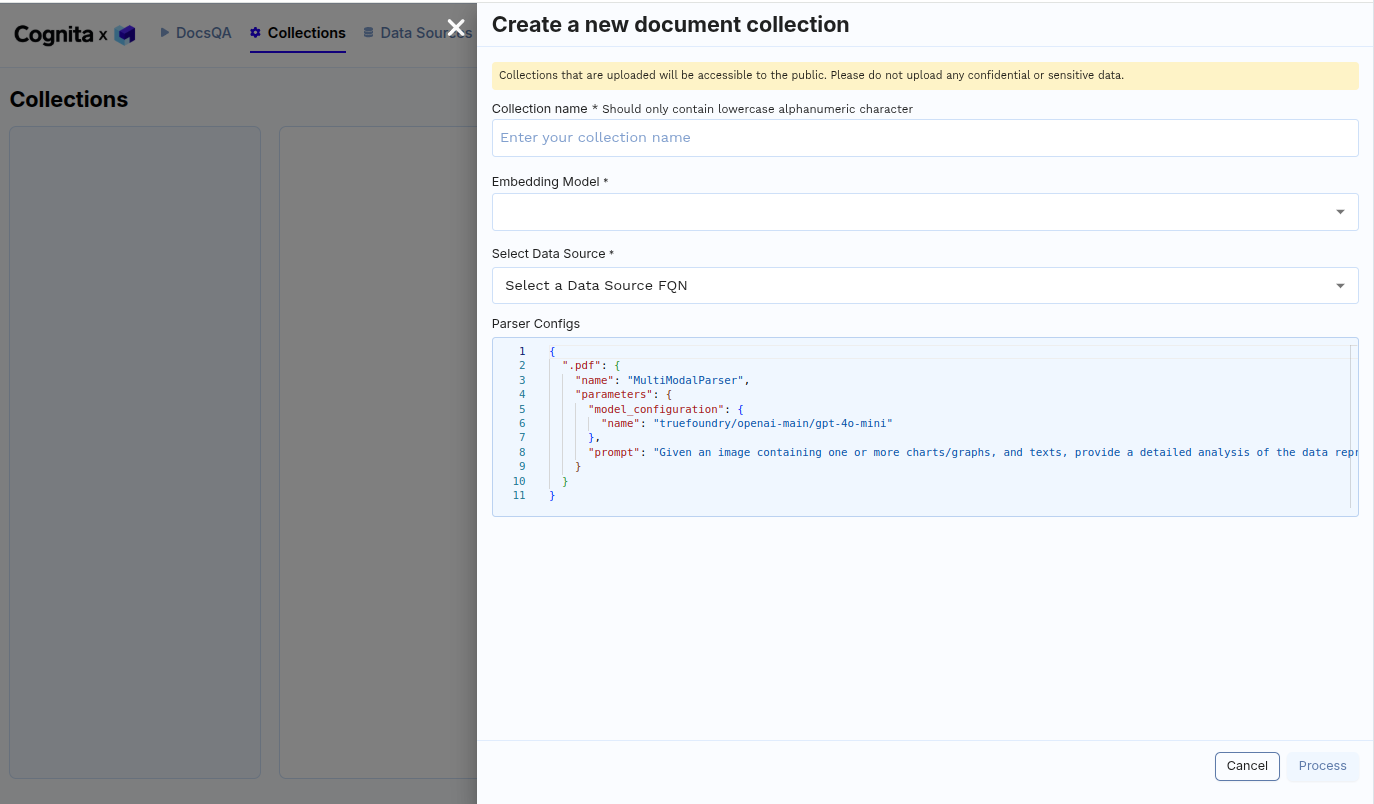

Cognita

Description: Cognita is a modular RAG framework designed for production-ready AI applications. It offers structured indexing, API-based retrieval, and multimodal support.

Supported Documents: PDFs, web pages, GitHub repositories, audio, video, and images.

GitHub Repository: truefoundry/cognita

Installation:

The easiest way to install Cognita is via Docker:

```shell
git clone https://github.com/truefoundry/cognita.git
cd cognita
cp models_config.sample.yaml models_config.yaml
```

Usage:

```shell
cd cognita
docker compose --env-file compose.env up
```

Then open the app at http://localhost:5001

Cognita doesn't really work out of the box: you need to configure it properly, and doing so is not straightforward.

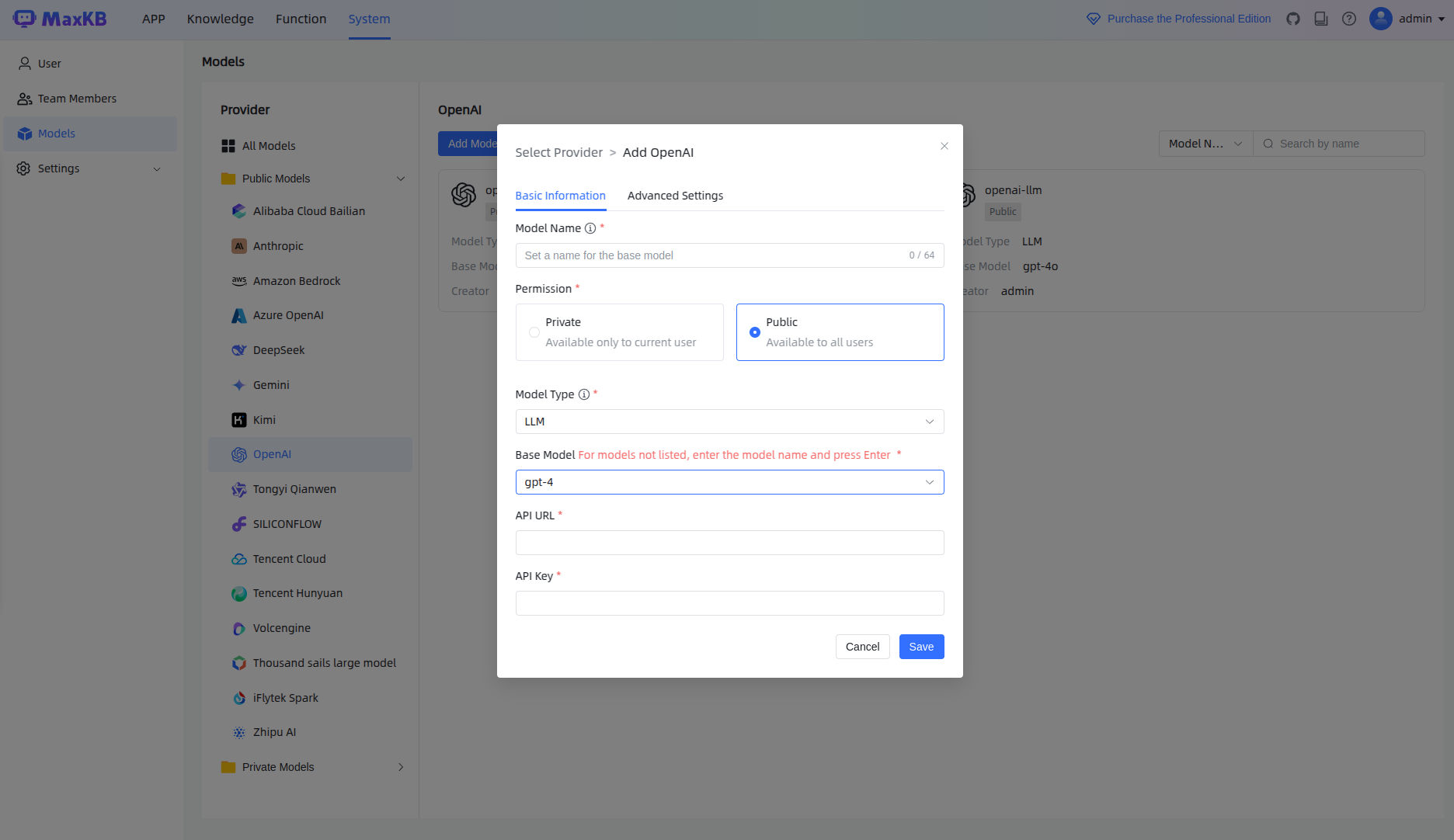

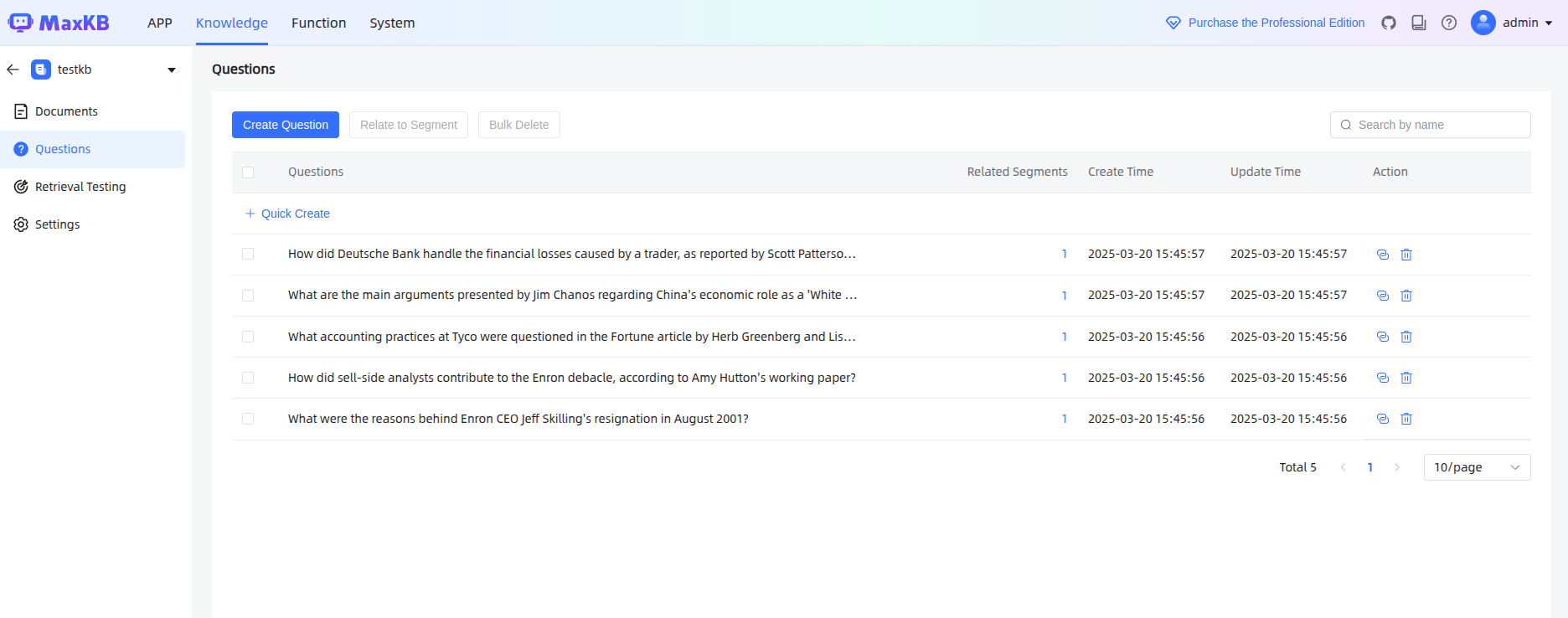

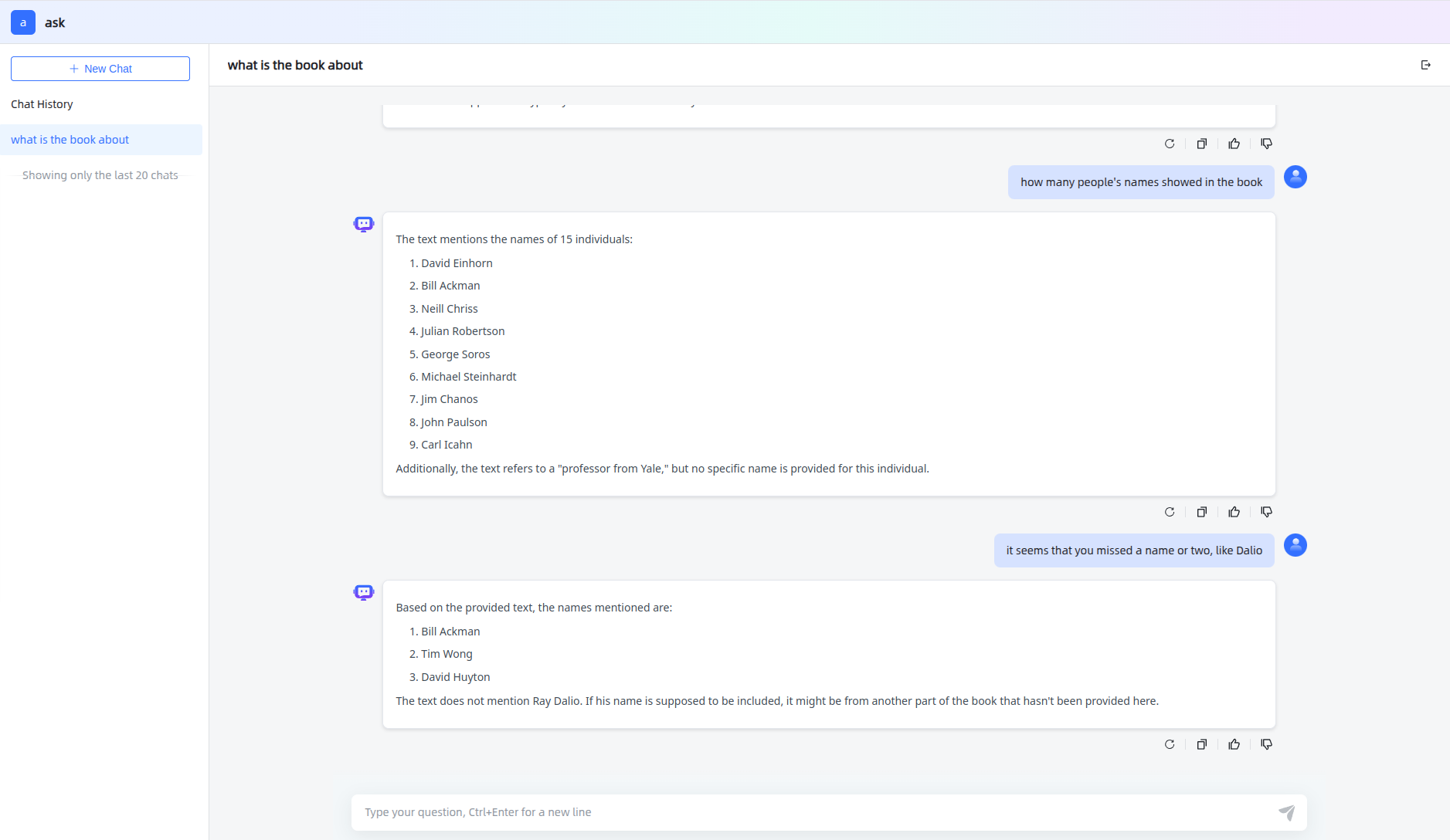

MaxKB

Description: MaxKB (Max Knowledge Base) is an open-source, ready-to-use RAG chatbot platform built on large language models (LLMs). It allows users to create a Q&A knowledge base by uploading documents or crawling web pages, with automatic text splitting and vector embedding to enable retrieval-augmented generation. MaxKB is model-agnostic, supporting various private and public LLMs (e.g., DeepSeek, Llama, Qwen, OpenAI’s GPT series, Claude, etc.). It also provides a workflow engine and integration features for orchestrating complex AI processes within existing systems.

Supported Documents: Markdown, plain text (TXT), Word documents (DOCX), PDFs, HTML, Excel spreadsheets (XLS/XLSX), and CSV files.

GitHub Repository: 1Panel-dev/MaxKB

Installation:

```shell
# Optional, not needed for the Docker setup below:
# git clone https://github.com/1Panel-dev/MaxKB.git
mkdir MaxKB
cd MaxKB
# Create docker-compose.yml with vim or your favorite editor (nano, code, etc.)
vim docker-compose.yml
```

Put the following content into docker-compose.yml

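```yaml
version: '3.8'

services:
  maxkb:
    image: 1panel/maxkb
    container_name: maxkb
    ports:
      # Open Web UI also defaults to port 8080; change the host side
      # (e.g. "8081:8080") if both run on the same machine
      - "8080:8080"
    volumes:
      - ~/.maxkb:/var/lib/postgresql/data
      - ~/.python-packages:/opt/maxkb/app/sandbox/python-packages
    restart: unless-stopped
```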

Usage:

```shell
cd MaxKB
docker compose up
```

Then open the app at http://localhost:8080

Log in with the default credentials: username `admin`, password `MaxKB@123..`

Overall, setting up the models was not very pleasant, but after that MaxKB was quite easy to use.

Conclusion

Building a Retrieval-Augmented Generation system requires careful selection of tools based on the specific needs of an application. Open-source RAG frameworks provide a cost-effective and flexible way to integrate external knowledge retrieval with LLM-based interactions. Whether for personal knowledge assistants or enterprise AI solutions, tools like Open Web UI, Verba, Onyx, Cognita, RagFlow and others offer a robust foundation for implementing RAG workflows. By leveraging these tools, developers can create intelligent systems that enhance factual accuracy and contextual relevance in AI-generated responses.

Personally, I recommend both Onyx and RagFlow for their comprehensive feature sets. In particular, Onyx stands out with its wide range of connectors, making it easy to integrate documents from various sources and build your own knowledge base. However, each tool has its own strengths and weaknesses, so it is essential to evaluate them based on your specific requirements. With the right combination of tools and expertise, you can build a powerful RAG system that transforms how users interact with AI-powered assistants.
